Part 7: SQL Database Interview Questions and Answers

This chapter explores SQL Database questions that .NET engineers should be able to answer in an interview.

The Answers are split into sections: What 👼 Junior, 🎓 Middle, and 👑 Senior .NET engineers should know about a particular topic.

Also, please take a look at other articles in the series: C# / .NET Interview Questions and Answers

🧠 Core Concepts

❓ What is normalization, and when would you denormalize your schema?

Database normalization organizes data into related tables to reduce duplication and maintain consistency.

Each fact is stored once, and relationships define how data connects.

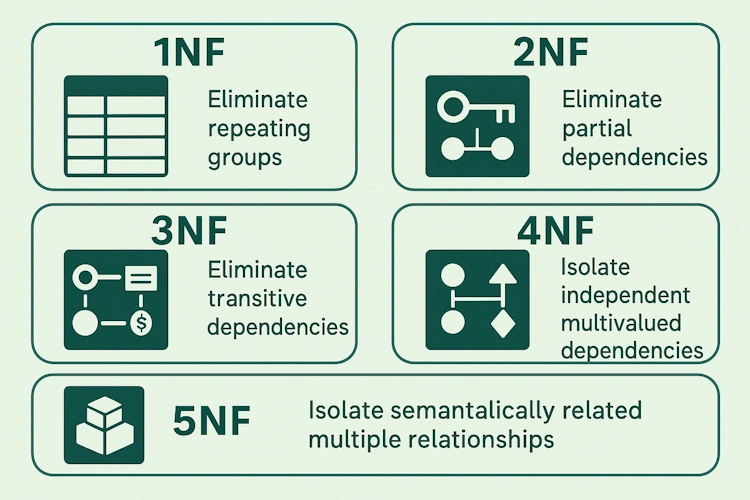

Normalization occurs in stages: Normal Forms (1NF to 5NF), each with stricter design rules that eliminate redundancy.

When to denormalize?

Normalization improves consistency, but it can slow down reads because it requires joins.

Sometimes you denormalize for performance, for example:

- Reporting dashboards that repeatedly join multiple large tables.

- E-commerce product listings where you store the category name inside the Products table to avoid joins.

- Analytics systems where query speed matters more than storage efficiency.

In modern systems, normalization is primarily a logical design principle, while denormalization is often implemented physically via materialized views, caching, or summary tables rather than manual duplication.

What .NET engineers should know about normalization:

- 👼 Junior: Know normalization avoids duplicate data and organizes tables by rules (1NF, 2NF, 3NF…).

- 🎓 Middle: Understand trade-offs: normalized data is consistent, but denormalized data is faster to query, with the risk of duplication.

- 👑 Senior: Decide where to denormalize (e.g., caching, read models, reporting) and design hybrid models that balance performance and maintainability.

📚 Resources: 5 Database Normalization Forms

❓ How would you explain the ACID properties to a junior developer, and why are they important?

ACID defines the guarantees that a transactional database provides to ensure data correctness and reliability.

It stands for:

- Atomicity — a transaction is all-or-nothing. Example: when transferring money, either both debit and credit succeed or neither does.

- Consistency — every transaction maintains the database's validity in accordance with rules and constraints (no orphan records, no negative balances).

- Isolation — transactions running at the same time behave as if they were executed sequentially.

- Durability — once a transaction is committed, its changes survive crashes or power loss.

Without ACID, data could be lost or duplicated, e.g., charging a user twice or creating inconsistent order records.

What .NET engineers should know:

- 👼 Junior: Know that ACID keeps database operations safe and consistent.

- 🎓 Middle: Understand how transactions enforce atomicity and isolation, and when to use them in business operations.

- 👑 Senior: Tune isolation levels, handle concurrency issues, and design systems that balance strict ACID with performance (e.g., when to use eventual consistency).

📚 Resources: ACID in Simple Terms

❓ What does SARGability mean in SQL?

SARGability (Search ARGument Able) describes whether a query condition can efficiently use an index.

A SARGable query allows the database engine to perform an Index Seek rather than scanning the entire table.

✅ SARGable example

SELECT * FROM Orders WHERE OrderDate >= '2024-01-01';❌ Non-SARGable example:

SELECT * FROM Orders WHERE YEAR(OrderDate) = 2024;In the second query, the YEAR() function blocks the use of the index on OrderDate, forcing a full scan.

The idea is to write conditions so that the column stands alone on one side of the comparison - no functions or complex expressions applied to it.

I usually check execution plans for “Index Seek” vs “Index Scan” to spot non-SARGable patterns.

What .NET engineers should know:

- 👼 Junior: Understand that SARGability helps SQL use indexes efficiently instead of scanning all rows.

- 🎓 Middle: Avoid functions, calculations, or mismatched types in WHERE clauses that block index seeks.

- 👑 Senior: Analyze execution plans and ORM-generated SQL (e.g., EF Core LINQ queries) for non-SARGable patterns; design indexes and predicates together for high-performance queries.

📚 Resources: What Is Sargability in SQL

❓ What’s the difference between a WHERE clause and a HAVING clause? Can you give a practical example of when you’d need HAVING?

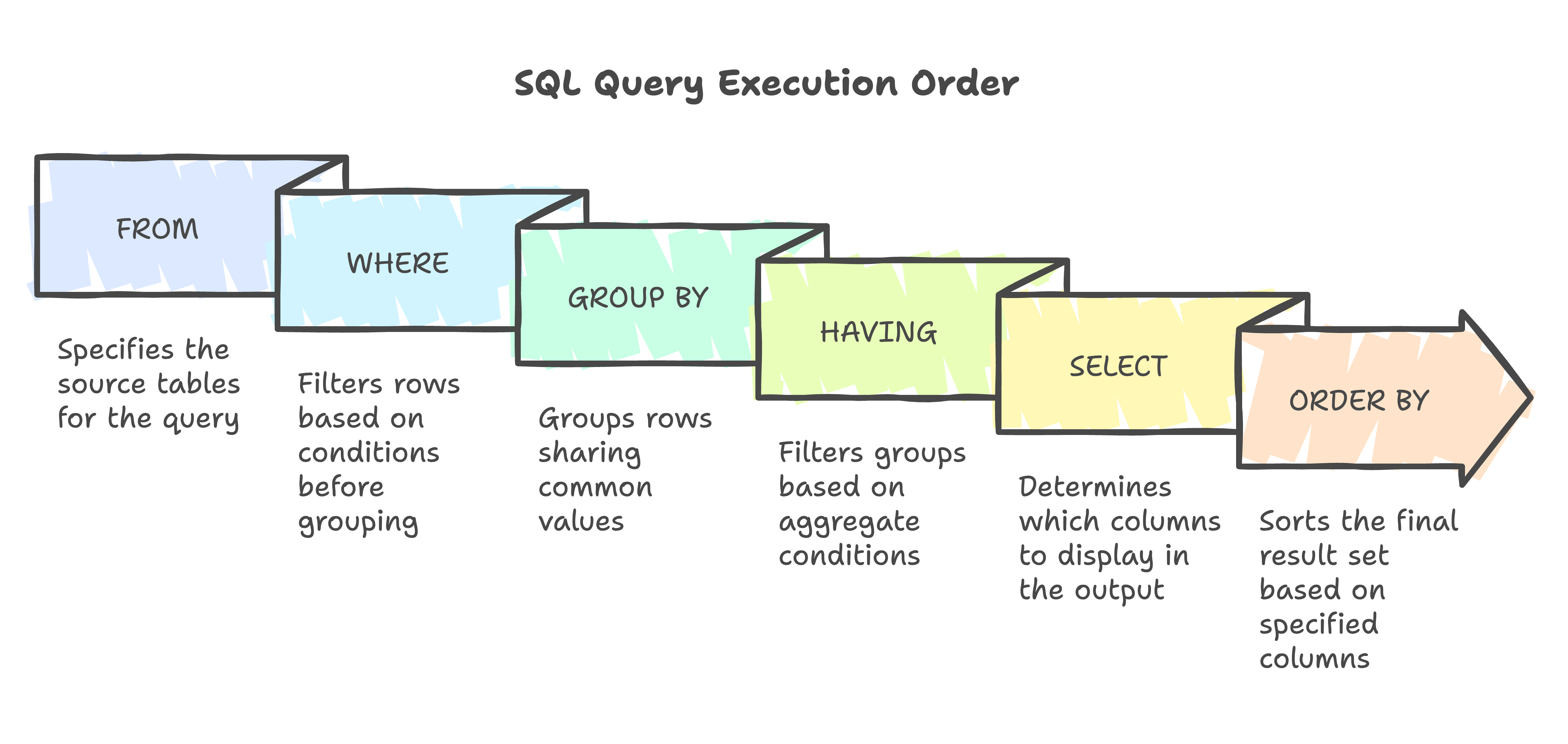

The difference lies in when each filter runs during query execution:

WHEREfilters rows before grouping/aggregation. “Give me all orders placed in 2025.”HAVINGfilters groups after aggregation. “Give me all customers who placed more than five orders in 2025.”

So if you’re working with raw rows, use WHERE. If you’re filtering based on an aggregate like COUNT(), SUM(), AVG(), you need HAVING.

Example: find customers with more than five orders.

-- Wrong (WHERE doesn’t see aggregated COUNT)

SELECT CustomerId, COUNT(*) AS OrderCount

FROM Orders

WHERE COUNT(*) > 5 -- ❌ invalid

GROUP BY CustomerId;

-- Correct (HAVING filters on aggregated result)

SELECT CustomerId, COUNT(*) AS OrderCount

FROM Orders

WHERE OrderDate >= '2025-01-01' AND OrderDate < '2026-01-01' -- ✅ Filter by year first

GROUP BY CustomerId

HAVING COUNT(*) > 5;Best practice:

- Use

WHEREfirst to reduce the data volume before grouping, it’s faster. - Only use

HAVINGfor aggregate conditions. - Some databases (e.g., PostgreSQL) may internally optimize

HAVINGwithout aggregates into aWHERE.

In EF Core, this maps to:

var result = db.Orders

.Where(o => o.OrderDate.Year == 2025)

.GroupBy(o => o.CustomerId)

.Where(g => g.Count() > 5)

.Select(g => new { CustomerId = g.Key, OrderCount = g.Count() });EF translates the second Where after the GroupBy into a SQL HAVING clause.

What .NET engineers should know:

- 👼 Junior: Know

WHEREfilters rows before grouping,HAVINGfilters after aggregation. - 🎓 Middle: Understand query execution order (

FROM → WHERE → GROUP BY → HAVING → SELECT) and filter early for performance. - 👑 Senior: Optimize aggregates and grouping operations, ensure correct index usage, and review generated SQL from LINQ group queries to avoid performance pitfalls.

📚 Resources:

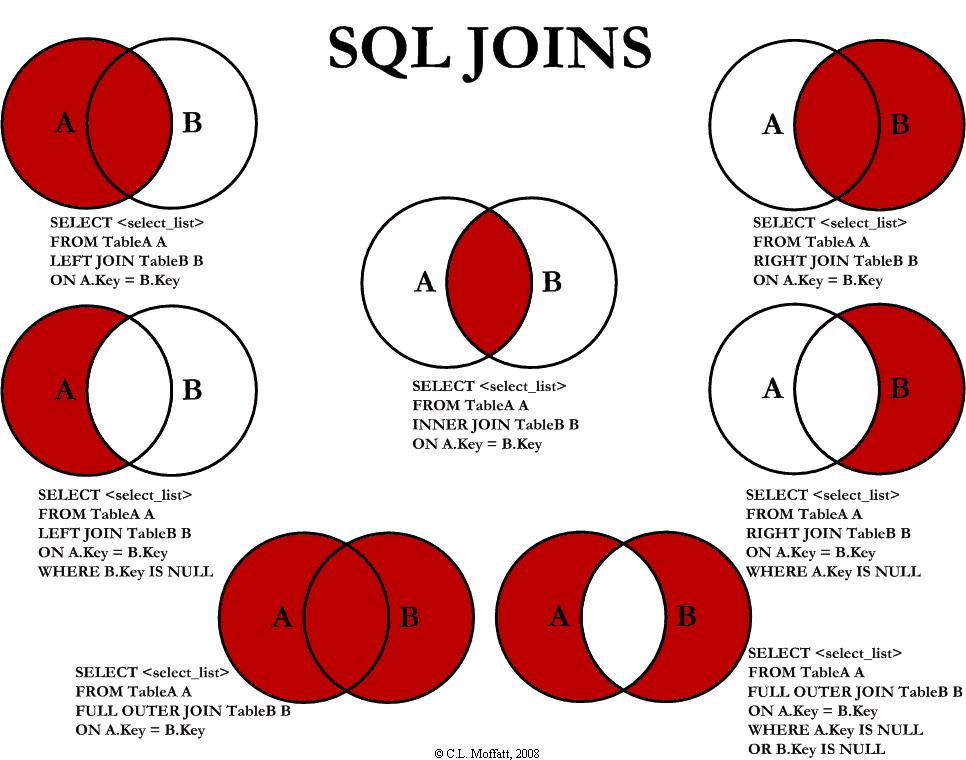

❓ What's the difference between INNER JOIN, LEFT JOIN, RIGHT JOIN, and FULL OUTER JOIN?

Joins define how rows from two tables are combined. They determine which rows are included when there’s no matching data between the tables.

- INNER JOIN — returns only matching rows from both tables.

- LEFT JOIN — returns all rows from the left table and matching rows from the right; unmatched ones show

NULLon the right side. - RIGHT JOIN — the opposite of LEFT JOIN; all rows from the right, plus matching rows from the left.

- FULL OUTER JOIN — returns all rows from both tables, filling

NULLfor missing matches.

Example:

-- Tables: Users and Orders

-- INNER JOIN: only users who have orders

SELECT u.UserName, o.OrderDate

FROM Users u

INNER JOIN Orders o ON u.UserId = o.UserId;

-- LEFT JOIN: all users, even if no orders

SELECT u.UserName, o.OrderDate

FROM Users u

LEFT JOIN Orders o ON u.UserId = o.UserId;

-- RIGHT JOIN: all orders, even if user missing

SELECT u.UserName, o.OrderDate

FROM Users u

RIGHT JOIN Orders o ON u.UserId = o.UserId;

-- FULL OUTER JOIN: everything from both tables

SELECT u.UserName, o.OrderDate

FROM Users u

FULL OUTER JOIN Orders o ON u.UserId = o.UserId;💡 Best practices:

- Prefer

LEFT JOINinstead ofRIGHT JOIN— It’s clearer and more widely supported. - Use explicit

JOINsyntax (not comma joins). - Always filter joined data explicitly to avoid Cartesian products (huge cross-multiplication of rows).

In EF Core:

// INNER JOIN

var result = from u in db.Users

join o in db.Orders on u.UserId equals o.UserId

select new { u.UserName, o.OrderDate };

// LEFT JOIN

var leftJoin = from u in db.Users

join o in db.Orders on u.UserId equals o.UserId into userOrders

from o in userOrders.DefaultIfEmpty()

select new { u.UserName, o?.OrderDate };EF Core automatically translates LINQ joins to SQL INNER JOIN or LEFT JOIN.

What .NET engineers should know:

- 👼 Junior: Know INNER JOIN shows only matching rows, LEFT/RIGHT keep all rows from one side.

- 🎓 Middle: Understand when to use each join, and how NULL values appear in results.

- 👑 Senior: Optimize joins on large datasets (indexes, query plans), avoid Cartesian products, and design schemas that minimize unnecessary joins.

📚 Resources:

❓ Let's say you have a table for Posts and Comments. How would you model the database to retrieve a post along with all its associated comments efficiently?

The classic way is a one-to-many relationship:

- A

Poststable with a primary key (PostId). - A

Commentstable with a foreign key (PostId) pointing toPosts.

Schema:

CREATE TABLE Posts (

PostId INT PRIMARY KEY,

Title NVARCHAR(200),

Content NVARCHAR(MAX),

CreatedAt DATETIME2

);

CREATE TABLE Comments (

CommentId INT PRIMARY KEY,

PostId INT NOT NULL,

Author NVARCHAR(100),

Text NVARCHAR(MAX),

CreatedAt DATETIME2,

FOREIGN KEY (PostId) REFERENCES Posts(PostId)

);Query to fetch a post with its comments:

SELECT p.PostId, p.Title, p.Content, c.CommentId, c.Author, c.Text, c.CreatedAt

FROM Posts p

LEFT JOIN Comments c ON p.PostId = c.PostId

WHERE p.PostId = @postId

ORDER BY c.CreatedAt;Efficiency considerations:

- Index

Comments.PostIdfor fast lookups. - Use pagination if a post can have thousands of comments (

OFFSET ... FETCH). - In ORMs like EF Core, you can use eager loading:

// Eager loading (loads post + all comments)

var post = await db.Posts

.Include(p => p.Comments)

.FirstOrDefaultAsync(p => p.PostId == id);

// Lazy loading alternative (loads comments only when accessed)

db.ChangeTracker.LazyLoadingEnabled = true;

var post = await db.Posts.FindAsync(id);

var comments = post.Comments; // triggers lazy loadWhat .NET engineers should know:

- 👼 Junior: Know a post has many comments, linked by a foreign key.

- 🎓 Middle: Know how to query one-to-many related items using

JOINor EF Core.Include(). Handle pagination for extensive collections. - 👑 Senior: Optimize read-heavy scenarios by using caching, denormalized read models, or projections; tune EF loading strategies (eager, lazy, explicit) for scalability.

❓ How would you model a "self-referencing" relationship, like an employee-manager hierarchy, in a SQL table?

A self-referencing relationship means a table’s rows relate to other rows in the same table.

In an employee–manager hierarchy, both employees and managers are stored in a single table — a manager is simply another employee.

Table design

You add a column that references the same table’s primary key.

CREATE TABLE Employees (

EmployeeId INT PRIMARY KEY,

Name NVARCHAR(100) NOT NULL,

ManagerId INT NULL,

FOREIGN KEY (ManagerId) REFERENCES Employees(EmployeeId)

);EmployeeId- uniquely identifies each employee.ManagerId- points to another employee (their manager).- Top-level managers (like CEOs) have

NULLasManagerId.

Example data:

| EmployeeId | Name | ManagerId |

|---|---|---|

| 1 | Alice (CEO) | NULL |

| 2 | Bob | 1 |

| 3 | Carol | 2 |

| 4 | Dave | 2 |

This creates a simple hierarchy: Alice → Bob → Carol/Dave

Querying the hierarchy

Find direct reports:

SELECT * FROM Emloyees WHERE ManagerId = 2; -- Bob’s teamFind employee + manager name (self-join):

SELECT e.Name AS Employee, m.Name AS Manager

FROM Employees e

LEFT JOIN Employees m ON e.ManagerId = m.EmployeeId;Find complete hierarchy (recursive CTE):

WITH OrgChart AS (

SELECT EmployeeId, Name, ManagerId, 0 AS Level

FROM Employees

WHERE ManagerId IS NULL

UNION ALL

SELECT e.EmployeeId, e.Name, e.ManagerId, Level + 1

FROM Employees e

JOIN OrgChart o ON e.ManagerId = o.EmployeeId

)

SELECT * FROM OrgChart;Design considerations

- Add indexes on ManagerId for faster lookups.

- Use recursive CTEs for reporting and hierarchy queries.

- Prevent circular references (e.g., an employee managing themselves) with constraints or triggers.

What .NET engineers should know:

- 👼 Junior: Know

ManagerIdreferencesEmployeeIdin the same table. - 🎓 Middle: Understand how to query hierarchies using self-joins or recursive CTEs.

- 👑 Senior: Design for large org charts to use indexes, detect cycles, and consider hierarchyid (SQL Server) or closure tables for complex trees.

📚 Resources:

❓ What’s the difference between a Primary Key, Unique Key, and Foreign Key?

These three types of constraints define how data relate to and remain consistent in a relational database.

| Key Type | Purpose | Allows NULLs | Can have duplicates? | Example Use |

|---|---|---|---|---|

| Primary Key | Uniquely identifies each row in a table. | No | No | Id column in Users table |

| Unique Key | Ensures all values in a column (or combination) are unique. | Yes (one in SQL Server, multiple in PostgreSQL/MySQL) | No | Email in Users table |

| Foreign Key | Links a row to another table’s primary key to maintain referential integrity. | Yes | Yes | UserId in Orders table referencing Users.Id |

Example:

CREATE TABLE Users (

Id INT PRIMARY KEY,

Email NVARCHAR(100) UNIQUE,

Name NVARCHAR(100)

);

CREATE TABLE Orders (

Id INT PRIMARY KEY,

UserId INT,

FOREIGN KEY (UserId) REFERENCES Users(Id)

);- The Primary Key (

Users.Id) uniquely identifies each user. - The Unique Key (

Email) ensures no two users share the same email. - The Foreign Key (

Orders.UserId) ensures each order belongs to an existing user.

Best practices:

- Define indexes on foreign keys to improve join performance.

- Use composite keys when a single column doesn’t uniquely identify a record (e.g.,

(OrderId, ProductId)). - Avoid natural keys such as email addresses or usernames for PKs to use surrogate keys (INT or GUID).

In EF Core:

public class User

{

[Key] public int Id { get; set; }

[Index(IsUnique = true)] public string Email { get; set; } = null!;

public ICollection<Order> Orders { get; set; } = new List<Order>();

}

public class Order

{

[Key] public int Id { get; set; }

[ForeignKey(nameof(User))] public int UserId { get; set; }

public User User { get; set; } = null!;

}What .NET engineers should know:

- 👼 Junior: Understand that a Primary Key uniquely identifies a record, a Unique Key prevents duplicates, and a Foreign Key links related tables.

- 🎓 Middle: Know how these constraints enforce integrity in SQL and how EF Core represents them with

[Key],[Index(IsUnique=true)], and[ForeignKey]. - 👑 Senior: Design schemas with composite keys, cascade behaviors, and indexed foreign keys. Balance normalization with query performance.

📚 Resources:

❓ How do foreign keys affect data integrity and performance?

A foreign key enforces a relationship between two tables. It ensures that the value in one table (the child) matches an existing value in another (the parent), thereby maintaining referential integrity. But this integrity comes with a performance cost: every insert, update, or delete must be validated by the database.

Example:

CREATE TABLE Orders (

Id INT PRIMARY KEY,

CustomerId INT,

FOREIGN KEY (CustomerId) REFERENCES Customers(Id)

);This guarantees that every CustomerId in Orders exists in Customers.

Impact on performance:

- Every

INSERT,UPDATE, orDELETEmust check the parent table: adding a small validation cost. - Foreign keys can improve query plans when indexed properly (faster joins).

- Use

ON DELETE CASCADEorON UPDATE CASCADEcarefully convenient, but can cause large chained deletions.

When high-throughput writes are required (e.g., ETL, event ingestion), you may temporarily disable constraints or defer them until batch completion, but only when the application guarantees consistency.

In EF Core, foreign keys are automatically created for navigation properties unless configured otherwise:

modelBuilder.Entity<Order>()

.HasOne(o => o.Customer)

.WithMany(c => c.Orders)

.HasForeignKey(o => o.CustomerId)

.OnDelete(DeleteBehavior.Restrict);This maps directly to a SQL foreign key constraint.

What .NET engineers should know:

- 👼 Junior: Understand that foreign keys ensure data consistency. Every child row must reference an existing parent.

- 🎓 Middle: Know foreign keys add validation overhead on writes, but help query optimization when indexed.

- 👑 Senior: Design trade-offs when to use cascading deletes, when to disable or defer constraints for bulk inserts, and how to manage integrity in distributed or event-driven systems.

❓ When would you use a junction table in a many-to-many relationship?

A junction table (or bridge table) links two tables in a many-to-many relationship. Each record in one table can relate to many in the other, and vice versa. The junction table breaks this into two one-to-many relationships.

Example:

CREATE TABLE Students (

Id INT PRIMARY KEY,

Name NVARCHAR(100)

);

CREATE TABLE Courses (

Id INT PRIMARY KEY,

Title NVARCHAR(100)

);

CREATE TABLE StudentCourses (

StudentId INT,

CourseId INT,

PRIMARY KEY (StudentId, CourseId),

FOREIGN KEY (StudentId) REFERENCES Students(Id),

FOREIGN KEY (CourseId) REFERENCES Courses(Id)

);Here, StudentCourses connects students and courses. A student can enroll in many classes, and each course can have many students.

If the link itself has attributes (e.g., EnrollmentDate, Grade), the junction table becomes a fully modeled entity rather than just a connector.

In EF Core:

Implicit many-to-many (no explicit join entity):

modelBuilder.Entity<Student>()

.HasMany(s => s.Courses)

.WithMany(c => c.Students);Explicit junction entity (when you need extra fields):

public class StudentCourse

{

public int StudentId { get; set; }

public int CourseId { get; set; }

public DateTime EnrolledOn { get; set; }

}What .NET engineers should know:

- 👼 Junior: Understand that a junction table connects two tables when many-to-many relationships are required.

- 🎓 Middle: Know how to define and query many-to-many relationships and avoid storing lists of IDs in a single column.

- 👑 Senior: Choose between implicit EF Core many-to-many mappings and explicit junction entities. Optimize for large datasets by using proper indexes and handling cascade rules carefully.

🔍 Querying

❓ How would you return all users and their last order date, even if some users have no orders

You’d join the Users table with the Orders table using a LEFT JOIN, so users without orders still appear.

To get the last order date, use MAX(order_date) and group by the user.

Example:

SELECT

u.UserId,

u.UserName,

MAX(o.OrderDate) AS LastOrderDate

FROM Users u

LEFT JOIN Orders o

ON u.UserId = o.UserId

GROUP BY u.UserId, u.UserName

ORDER BY LastOrderDate DESC;This ensures:

- Users with orders show their latest order date.

- Users with no orders still appear, but

LastOrderDatewill beNULL.

💡 Performance tip:

For large datasets, use a window function instead of grouping:

SELECT UserId, UserName, OrderDate

FROM (

SELECT

u.UserId, u.UserName, o.OrderDate,

ROW_NUMBER() OVER (PARTITION BY u.UserId ORDER BY o.OrderDate DESC) AS rn

FROM Users u

LEFT JOIN Orders o ON u.UserId = o.UserId

) AS ranked

WHERE rn = 1;

This avoids complete aggregation when you only need the most recent row per user.

What .NET engineers should know:

- 👼 Junior: Know how to join tables and why to use

LEFT JOINto keep all users. - 🎓 Middle: Understand grouping and aggregation (

MAX,GROUP BY), and how to handleNULLresults safely. - 👑 Senior: Optimize for scale use indexes on

UserIdandOrderDate, consider window functions for efficiency, and avoid unnecessary sorting in ORM queries.

📚 Resources:

❓ How does a subquery differ from a JOIN?

A JOIN combines data from multiple tables into one result set by linking rows that share a related key. A subquery runs a nested query first, then uses its result in the outer query often as a filter or computed value.

Both return similar results, but the JOIN is usually faster and more readable for multi-table queries.

Example (JOIN):

SELECT o.OrderId, u.UserName

FROM Orders o

JOIN Users u ON o.UserId = u.UserId;

Example (Subquery):

SELECT OrderId

FROM Orders

WHERE UserId IN (SELECT UserId FROM Users WHERE IsActive = 1);

When to use each:

- Use a JOIN when you need data from multiple tables displayed side by side.

- Use a subquery when you only need to reference another table’s value (like filtering or aggregation).

💡 Performance note: Modern SQL optimizers often rewrite subqueries as joins; however, correlated subqueries (those that reference the outer query) can be slower, as they may execute once per row. Prefer CTEs or JOINs for clarity and optimization hints in complex logic.

What .NET engineers should know:

- 👼 Junior: Understand that JOIN merges tables, while a subquery runs a query inside another query.

- 🎓 Middle: Know that JOINs are typically more efficient, but subqueries can simplify logic when you only need a single value or aggregate (like

MAX(),COUNT(), etc.). - 👑 Senior: Use subqueries carefully, they can hurt performance if executed per row. Replace with JOINs or CTEs whenever possible for clarity and to provide optimizer hints. Understand that some databases internally rewrite subqueries into joins.

📚 Resources: Joins SQL Server

❓ What is a Common Table Expression (CTE) and how does it differ from a temporary table?

A Common Table Expression (CTE) is a temporary, named result set defined within a query.

A temporary table is a physical object created in the temp database that can be reused within the same session.

Example scenario

We needed to find customers who placed multiple orders in the last 30 days and spent more than 10% above their average purchase value.

The original query had several nested subqueries and was hard to maintain, so it was refactored using a CTE:

CTE

WITH RecentOrders AS (

SELECT CustomerId, SUM(Amount) AS TotalSpent

FROM Orders

WHERE OrderDate >= DATEADD(DAY, -30, GETDATE())

GROUP BY CustomerId

),

AverageSpending AS (

SELECT CustomerId, AVG(Amount) AS AvgSpent

FROM Orders

GROUP BY CustomerId

)

SELECT r.CustomerId, r.TotalSpent, a.AvgSpent

FROM RecentOrders r

JOIN AverageSpending a ON r.CustomerId = a.CustomerId

WHERE r.TotalSpent > a.AvgSpent * 1.1;CTEs enhance readability by breaking down complex queries into logical components. They exist only during query execution.

Example with a temporary table:

SELECT * INTO #RecentOrders

FROM Orders WHERE OrderDate >= DATEADD(DAY, -30, GETDATE());

CREATE INDEX IX_RecentOrders_CustomerId ON #RecentOrders(CustomerId);Temporary tables persist for the duration of the session, can be indexed, and are helpful when the same data is reused multiple times.

When should you use a CTE vs. a temporary table?

| Technique | Use When | Advantages | Limitations |

|---|---|---|---|

| CTE | Query needs multiple logical steps or recursion | Improves readability; no cleanup needed | Re-evaluated on each reference |

| Temp Table | Data reused or needs indexing | Can persist and be optimized | Extra I/O and storage overhead |

CTEs can also be recursive, ideal for hierarchical data (e.g., employee trees, folder structures).

What .NET engineers should know:

- 👼 Junior: Know what CTEs and temp tables are and how they simplify complex queries.

- 🎓 Middle: Understand performance trade-offs, CTEs are inline, temp tables can be indexed and reused.

- 👑 Senior: Choose based on workload, use temp tables for large intermediate datasets and CTEs for clarity or recursion. In ORMs like EF Core, CTEs often appear in generated SQL for LINQ groupings or projections.

📚 Resources:

❓ What are window functions (ROW_NUMBER, RANK, DENSE_RANK, etc.), and where are they useful?

Window functions perform calculations across a set of rows related to the current row without collapsing them like GROUP BY does. They’re used for ranking, running totals, moving averages, and comparing rows within partitions.

Example:

SELECT

EmployeeId,

DepartmentId,

Salary,

ROW_NUMBER() OVER (PARTITION BY DepartmentId ORDER BY Salary DESC) AS RowNum,

RANK() OVER (PARTITION BY DepartmentId ORDER BY Salary DESC) AS RankNum,

DENSE_RANK() OVER (PARTITION BY DepartmentId ORDER BY Salary DESC) AS DenseRankNum

FROM Employees;ROW_NUMBER()gives unique sequential numbers.RANK()skips numbers when there are ties.DENSE_RANK()doesn’t skip numbers on relations.

Other common window functions:

SUM()/AVG()withOVER()running totals and moving averages.LAG()/LEAD()compare current and previous/next rows (e.g., change since last month).

💡 Performance tips:

- Index partition and order columns used in the window definition.

- Avoid sorting giant unindexed sets the

ORDER BYin a window function, as it can be expensive. - Prefer window functions over self-joins or correlated subqueries they’re more efficient and readable.

In EF Core, window functions can be written via raw SQL or newer LINQ support for RowNumber and pagination:

var employees = db.Employees

.OrderByDescending(e => e.Salary)

.Select((e, i) => new { e.Name, RowNumber = i + 1 })

.Take(10);

What .NET engineers should know:

- 👼 Junior: Know that window functions let you number or compare rows without grouping them.

- 🎓 Middle: Use them for pagination, top-N queries, or comparisons. Know the differences between

ROW_NUMBER,RANK, andDENSE_RANK. - 👑 Senior: Optimize partitioning and ordering; apply window functions to replace self-joins or nested queries for better performance. Ensure indexing aligns with partition keys on large datasets.

📚 Resources: Introduction to T-SQL Window Functions

❓ You have a query that needs to filter on a column that can contain NULL values. What are some pitfalls to avoid?

In SQL, NULL means unknown, not “empty,” which affects comparisons, filters, and even indexes. SQL uses three-valued logic: TRUE, FALSE, and UNKNOWN. That’s why queries involving NULL behave differently than expected.

1. Comparisons with NULL

Comparing with = or != never returns TRUE:

SELECT * FROM Users WHERE Email = NULL; -- ❌ Always returns 0 rows✅ Correct way:

SELECT * FROM Users WHERE Email IS NULL;

SELECT * FROM Users WHERE Email IS NOT NULL;2. Beware of NOT IN with NULLs

If the subquery or list contains a NULL, your entire comparison fails because of SQL’s three-valued logic.

-- Suppose one of these Ids is NULL

SELECT * FROM Users WHERE Id NOT IN (SELECT UserId FROM BannedUsers);

-- ❌ Returns 0 rows if any UserId is NULL✅ Use NOT EXISTS instead:

SELECT * FROM Users u

WHERE NOT EXISTS (

SELECT 1 FROM BannedUsers b WHERE b.UserId = u.Id

);3. Indexes and NULLs

- Most databases include

NULLs in indexes, but their handling can differ. - SQL Server and PostgreSQLinclude them by default; PostgreSQL also allows partial indexes:

Example (exclude NULLs from index):

CREATE INDEX IX_Users_Email_NotNull

ON Users(Email)

WHERE Email IS NOT NULL;4. Aggregations skip NULLs

- Functions like

COUNT(column)ignore NULLs. - If you want to count all rows, use

COUNT(*).

-- Counts only non-null emails

SELECT COUNT(Email) FROM Users;

-- Counts all users

SELECT COUNT(*) FROM Users;5. Handle NULLs explicitly

Use COALESCE or ISNULL to replace unknowns:

SELECT COALESCE(Phone, 'N/A') FROM Users;Note:

In C#, null is not the same as SQL NULL. When using ADO.NET or EF Core, DBNull.Value represents a SQL null, ensure proper conversion when reading or writing nullable columns.

What .NET engineers should know:

- 👼 Junior: Understand

NULLmeans “unknown,” not empty. UseIS NULLandIS NOT NULL. - 🎓 Middle: Know pitfalls like

NOT INwithNULLs, and that aggregations skip nulls. Learn how indexing treats null values. - 👑 Senior: Design schema and defaults to minimize

NULLissues. Handle conversions properly in code (DBNullvsnull), and ensure queries, constraints, and ORM mappings behave consistently.

📚 Resources:

❓ How do you decide between using a JOIN in the database versus handling the relationship in your application code?

Whether to JOIN in SQL or in application code depends on where it’s cheaper and cleaner to combine the data.

Use a JOIN in the database when:

- Tables have a clear relational link (

Orders → Customers). - The database can perform filtering, aggregation, or sorting more efficiently.

- You want to minimize round-trips between the app and DB.

- Data volumes are moderate and join operations are well-indexed.

Example (JOIN in DB is usually better):

SELECT o.OrderId, u.UserName

FROM Orders o

JOIN Users u ON o.UserId = u.UserId;Handle in application code when:

- Data comes from multiple sources (SQL, external APIs, caches, or services).

- Joins would require complex logic that cannot be expressed in SQL (e.g., custom business rules, ML scoring, etc.)

- You’re batching or caching data to improve performance across requests.

- You need loose coupling (e.g., in microservices, separate read models per service).

If Users were in SQL, but order details came from a third-party API, you’d fetch them separately in C# and merge in memory.

In EF Core:

var orders = db.Orders

.Include(o => o.User) // Executes a JOIN

.ToList();Use .Include() or .ThenInclude() when data lives in the same DB. If the data comes from another source (e.g., an API), load it separately and merge it in memory.

What .NET engineers should know:

- 👼 Junior: Understand that

JOINcombines related tables, while app-side joins merge data after retrieval. - 🎓 Middle: Know when DB joins are more efficient and when app-side composition makes sense (e.g., combining SQL + API results).

- 👑 Senior: Decide on a join strategy based on latency, data ownership, and scalability. Avoid chatty queries; favor server-side joins for local data and app joins for distributed systems.

📚 Resources:

❓ What is the difference between COUNT(*) and COUNT(column_name)?

COUNT(*) counts all rows in the result set — including those with NULL values. COUNT(column_name) counts only rows where the column is not NULL.

Example:

SELECT

COUNT(*) AS TotalUsers, -- Counts all rows

COUNT(Email) AS UsersWithEmail -- Counts only rows where Email IS NOT NULL

FROM Users;In most databases (SQL Server, PostgreSQL, MySQL),

COUNT(*)does not read all columns; it’s internally optimized to count rows from metadata or index statistics.COUNT(1)behaves identically toCOUNT(*); it’s a common myth that it’s faster.

In EF Core:

var total = await db.Users.CountAsync();

var withEmail = await db.Users.CountAsync(u => u.Email != null);

Both queries translate directly to the correct SQL form (COUNT(*) vs COUNT(column)).

What .NET engineers should know:

- 👼 Junior: Remember that

COUNT(*)counts every row, whileCOUNT(column)skipsNULLs. - 🎓 Middle: Use

COUNT(column)when checking for filled fields; understand thatCOUNT(1)is not faster thanCOUNT(*). - 👑 Senior: Know how COUNT is optimized by the DB engine (metadata or index scans) and ensure correct semantic intent when using ORM predicates (

!= null).

❓ How can you pivot or unpivot data in SQL?

Pivoting converts rows into columns, summarizing data (e.g., totals by quarter).

Unpivoting does the opposite, it converts columns into rows, useful for normalizing wide tables.

Example (Pivot):

SELECT *

FROM (

SELECT Year, Quarter, Revenue

FROM Sales

) AS Source

PIVOT (

SUM(Revenue) FOR Quarter IN ([Q1], [Q2], [Q3], [Q4])

) AS PivotTable;Example (Unpivot):

SELECT Year, Quarter, Revenue

FROM SalesPivoted

UNPIVOT (

Revenue FOR Quarter IN ([Q1], [Q2], [Q3], [Q4])

) AS Unpivoted;Alternative (Cross-platform):

Not all databases support PIVOT syntax (e.g., MySQL).

You can achieve the same result with conditional aggregation:

SELECT

Year,

SUM(CASE WHEN Quarter = 'Q1' THEN Revenue END) AS Q1,

SUM(CASE WHEN Quarter = 'Q2' THEN Revenue END) AS Q2

FROM Sales

GROUP BY Year;

💡 Performance note:

- Always aggregate before pivoting large datasets.

- Ensure the pivot key (e.g.,

Quarter) has limited distinct values. - Avoid pivoting extremely wide datasets in OLTP systems, better to handle such reshaping in ETL or reporting layers.

What .NET engineers should know:

- 👼 Junior: Know that PIVOT turns rows into columns for summaries; UNPIVOT does the reverse.

- 🎓 Middle: Understand PIVOT syntax and how to emulate it using

CASE+GROUP BYwhen native syntax isn’t supported - 👑 Senior: Understand that

PIVOTcan be replaced with conditional aggregation usingCASE+GROUP BYfor flexibility and performance. Always validate indexing and memory usage when transforming large datasets.

📚 Resources: Using PIVOT and UNPIVOT

❓ How do you find duplicates in a table?

To find duplicates, group rows by the columns that define “uniqueness” and filter with HAVING COUNT(*) > 1.

Example:

SELECT Email, COUNT(*) AS Count

FROM Users

GROUP BY Email

HAVING COUNT(*) > 1;What .NET engineers should know:

- 👼 Junior: Learn to use

GROUP BYwithHAVING COUNT(*) > 1to detect duplicate values. - 🎓 Middle: Can join this result back to the original table to inspect complete duplicate rows or use

ROW_NUMBER()to keep only one instance and remove others. - 👑 Senior: Understand root causes (missing constraints, concurrent inserts, ETL logic). Prevent duplicates via unique indexes, transactions, and data validation, not just cleanup queries.

❓ What’s the difference between UNION and UNION ALL?

UNIONcombines results from multiple queries and removes duplicates.UNION ALLalso combines results but keeps all rows, including duplicates — it’s faster because it skips the distinct check.

In EF Core:

var query = db.Customers

.Select(c => new { c.Name, c.Email })

.Union(db.Subscribers.Select(s => new { s.Name, s.Email })); // UNION by default

var all = db.Customers

.Select(c => new { c.Name, c.Email })

.Concat(db.Subscribers.Select(s => new { s.Name, s.Email })); // UNION ALL equivalent

In LINQ, Union() performs DISTINCT behavior, while Concat() keeps duplicates.

What .NET engineers should know:

- 👼 Junior: Remember —

UNIONremoves duplicates,UNION ALLdoesn’t. - 🎓 Middle: Know

UNIONadds sorting overhead; preferUNION ALLfor large or non-overlapping datasets. - 👑 Senior: Understand that

UNIONrequires a sort or hash operation to eliminate duplicates. On large datasets, this can be costly — chooseUNION ALLwith downstream deduplication if performance matters more than strict uniqueness.

📚 Resources: Set Operators - UNION

📚 Indexing and Query Optimization

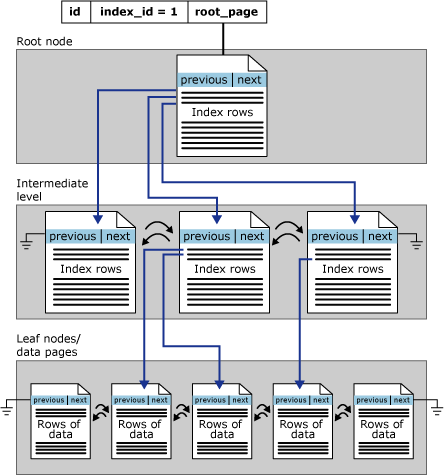

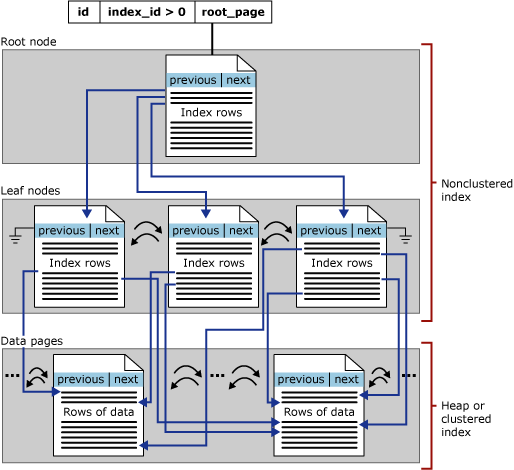

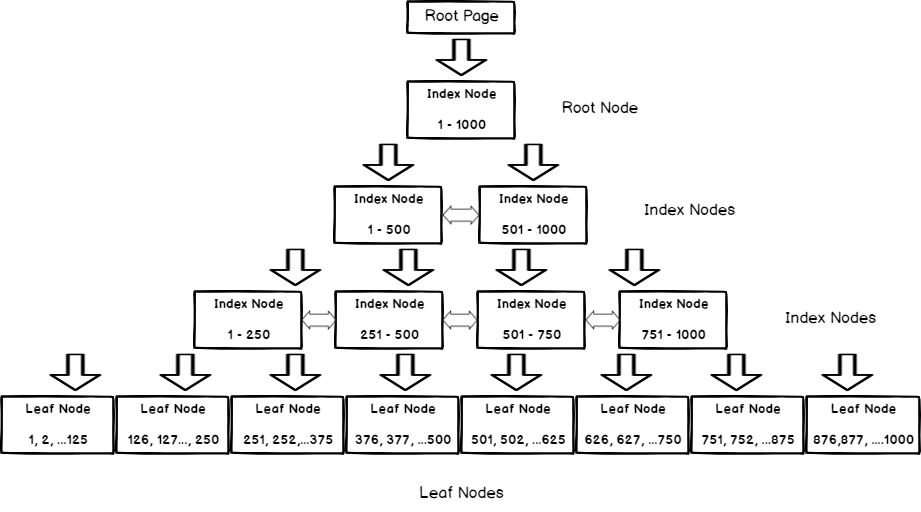

❓ What’s the difference between a clustered and a non-clustered index?

- A clustered index defines the physical order of data in a table — the table’s rows are stored directly in that index.

- A non-clustered index is a separate structure that stores key values and pointers (row locators) to the actual data.

Key difference between Clustered and non-clustered index:

| Feature | Clustered Index | Non-Clustered Index |

|---|---|---|

| Physical data order | Yes | No |

| Number per table | 1 | Many |

| Storage | Data pages are the index | Separate structure |

| Best for | Range scans, sorting | Targeted lookups, filters |

| Lookup cost | Direct (data is in index) | Requires key lookup to table |

Clustered index

- A table can have only one clustered index.

- The data rows themselves are stored in that order.

- The clustered index key is automatically part of every non-clustered index.

Example:

CREATE CLUSTERED INDEX IX_Orders_OrderId

ON Orders(OrderId);This makes the table physically sorted by OrderId.

When to use: Primary key or frequently range-filtered column (e.g., OrderDate, Id).

Non-clustered index

- Doesn’t change the data order, it’s a lookup table pointing to rows.

- You can have many non-clustered indexes.

- Useful for search-heavy queries on non-key columns.

Example:

CREATE NONCLUSTERED INDEX IX_Orders_CustomerId

ON Orders(CustomerId);💡 Tip:

- Every table should have a clustered index, usually on the primary key.

- Add non-clustered indexes for frequent filters, joins, or sorts.

- Keep indexes lean; too many slow down inserts/updates.

What .NET engineers should know:

- 👼 Junior: Know clustered = main data order, non-clustered = secondary index.

- 🎓 Middle: Understand performance trade-offs and how SQL uses indexes for lookups and sorting.

- 👑 Senior: Design index strategies based on workload; balance reads vs writes, monitor fragmentation, and tune composite or covering indexes based on query patterns.

📚 Resources: Clustered and nonclustered indexes

❓ Can you explain what a composite index is and why the order of columns in it matters?

A composite index (or multi-column index) is built on two or more columns of a table.

It helps speed up queries that filter or sort by the same column combinations.

Example

CREATE INDEX IX_Orders_CustomerId_OrderDate

ON Orders(CustomerId, OrderDate);This index works efficiently for:

-- Uses both columns in the index

SELECT * FROM Orders

WHERE CustomerId = 42 AND OrderDate > '2025-01-01';…but not this one:

-- Only filters by OrderDate won't use the composite index efficiently

SELECT * FROM Orders

WHERE OrderDate > '2025-01-01';That’s because the index is sorted first by CustomerId, then by OrderDate. The column order defines which filters can use the index efficiently. The database can use the index for the leftmost prefix of the defined order.

💡 Best practices:

- Put the most selective column first, the one that filters out the most rows.

- Match column order to your most frequent query patterns.

- Avoid redundant indexes

(A, B)already covers(A)in most databases. - Use INCLUDE columns (SQL Server) for extra fields used in

SELECTto create a covering index.

In EF Core, the same logic applies queries must align with index column order:

// Uses both parts of the composite index

var orders = await db.Orders

.Where(o => o.CustomerId == 42 &amp;amp;amp;amp;amp;amp;amp;amp;amp;amp;amp;amp;amp;amp;amp;amp;&amp;amp;amp;amp;amp;amp;amp;amp;amp;amp;amp;amp;amp;amp;amp;amp; o.OrderDate > new DateTime(2025, 1, 1))

.ToListAsync();If you filter only by OrderDate, the database may perform an index scan instead of a seek.

What .NET engineers should know:

- 👼 Junior: Know that composite indexes combine multiple columns for faster lookups.

- 🎓 Middle: Understand that column order affects which queries can use the index.

- 👑 Senior: Design composite indexes based on query selectivity and workload patterns; avoid redundant indexes and use covering or filtered variants for critical queries.

📚 Resources:

❓ What are the different types of indexes available in SQL databases?

Indexes come in several flavors, each optimized for a different kind of query or storage strategy. Think of them like various kinds of maps; each one helps you find data faster, but in its own way.

1. Clustered Index

- Defines the physical order of table data.

Each table can have only one clustered index; the table is the index.

✅ Best for range queries, sorting, or primary key lookups.

CREATE CLUSTERED INDEX IX_Orders_OrderId ON Orders(OrderId);2. Non-Clustered Index

- Separate the structure from the table.

- Points to data rows (like a book index).

- You can have many per table.

- Supports quick lookups for frequently filtered columns.

✅ Great for frequent filters and lookups on non-key columns.

CREATE NONCLUSTERED INDEX IX_Orders_CustomerId ON Orders(CustomerId);3. Composite (Multicolumn) Index

Combines multiple columns into one index.

- ✅ Useful when queries filter or sort by a combination of columns.

- ⚠️ Column order matters —

(A, B)≠(B, A).

CREATE INDEX IX_Orders_CustomerId_OrderDate ON Orders(CustomerId, OrderDate);4. Covering Index (with Included Columns)

Includes all columns needed by a query so that the database can serve results without table lookups.

- ✅ Improves read performance, especially for frequent, read-heavy queries.

- ⚠️ Increases index size and slows writes.

CREATE NONCLUSTERED INDEX IX_Orders_CustomerId

ON Orders(CustomerId)

INCLUDE (OrderDate, Total);5. Unique Index

Ensures all indexed values are distinct often created automatically by PRIMARY KEY or UNIQUE constraints.

CREATE UNIQUE INDEX IX_Users_Email ON Users(Email);6. Filtered / Partial Index

Indexes only a subset of rows based on a condition.

- ✅ Saves space and speeds up targeted queries.

- ⚠️ Limited to databases that support it (e.g., SQL Server, PostgreSQL).

CREATE UNIQUE INDEX IX_Subscriptions_Active

ON Subscriptions(UserId)

WHERE IsActive = 1;7. Full-Text Index

Specialized for searching inside long text fields or documents. Supports CONTAINS / FREETEXT queries.

CREATE FULLTEXT INDEX ON Articles(Title, Body)

KEY INDEX PK_Articles;8. Spatial Index

Optimized for geolocation or geometric data (e.g., points, polygons).

Enables queries like “find locations within 10 km.”

CREATE SPATIAL INDEX SIDX_Locations_Geo ON Locations(Geo);9. Columnstore Index

Stores data in a columnar format rather than in rows.

Ideal for analytics and aggregation-heavy workloads (OLAP).

✅ Massive compression, optimized for SUM, COUNT, and AVG queries.

⚠️ Slower for frequent single-row lookups.

CREATE CLUSTERED COLUMNSTORE INDEX IX_Sales_ColumnStore ON Sales;

10. Hash Index (Memory-Optimized Tables)

Used in in-memory database tables for constant-time lookups.

Available in SQL Server (In-Memory OLTP) and PostgreSQL hash indexes.

Summary

| Type | Stores Data | Unique | Best For | Trade-off |

|---|---|---|---|---|

| Clustered | Yes | Yes | Range scans, sorting | Only one per table |

| Non-Clustered | No | Optional | Filters and lookups | Extra lookups |

| Composite | No | Optional | Multi-column filters | Column order matters |

| Covering | No | Optional | Read-heavy queries | Larger index size |

| Unique | No | Yes | Data integrity | None |

| Filtered | No | Optional | Partial data sets | Limited support |

| Full-Text | No | Optional | Text search | Storage-heavy |

| Spatial | No | Optional | Geo queries | Complex setup |

| Columnstore | Columnar | Optional | Analytics workloads | Slow single-row ops |

EF Core

Doesn’t automatically create indexes, except for primary and foreign keys. You can define custom ones using the Fluent API:

modelBuilder.Entity<Order>()

.HasIndex(o => new { o.CustomerId, o.OrderDate })

.HasDatabaseName("IX_Orders_CustomerId_OrderDate");

What .NET engineers should know:

- 👼 Junior: Know basic index types (clustered, non-clustered, unique).

- 🎓 Middle: Understand composite, covering, and filtered indexes and their trade-offs.

- 👑 Senior: Design an overall indexing strategy, analyze query workloads, tune for selectivity, monitor index usage, and remove redundant or overlapping indexes.

📚 Resources: Index architecture and design guide

❓ How would you debug a slow query, and what tools would you use?

When a query is slow, start by verifying where the delay is coming from: app logic, the ORM, or the database itself.

Once it’s confirmed as a SQL issue, follow these steps:

1. Confirm the bottleneck

Before diving into SQL:

- Log timings at the app level (for example, EF Core logs SQL durations).

- Check whether the network, the ORM-generated SQL, or the database itself is causing the slowness.

Once you’re sure the query is the issue, it's time to go deeper.

2. Capture slow queries

| Database | Built-in Tools |

|---|---|

| SQL Server | Query Store, SQL Profiler, Extended Events |

| PostgreSQL | pg_stat_statements, log_min_duration_statement |

| MySQL / MariaDB | Slow Query Log, performance_schema, SHOW PROFILES |

| Oracle | Automatic Workload Repository (AWR) |

| SQLite | EXPLAIN QUERY PLAN |

3. Reproduce and measure

Run the query manually in your SQL tool (SSMS, pgAdmin, MySQL Workbench, etc.)

Note execution time, result size, and resource usage.

4. Check the execution plan

| Database | How to see plan | Example |

|---|---|---|

| SQL Server | Ctrl+M in SSMS > Include Actual Execution Plan | Identifies index scans, key lookups, bad joins. |

| PostgreSQL | EXPLAIN (ANALYZE, BUFFERS) | Shows real runtime and I/O cost. |

| MySQL | EXPLAIN ANALYZE (MySQL 8+) | Displays cost, row estimates, and actual timings. |

Look for:

- Table scans instead of index seeks.

- Large join loops or missing indexes.

- Misestimated row counts (outdated statistics).

5. Optimize

Common fixes:

- Add or tune indexes.

- Simplify joins and filters.

- Use selective

WHEREconditions. - Update statistics and ensure query parameters don’t trigger 'bad' plans (parameter sniffing).

- In MySQL/PostgreSQL, check buffer pool or work_mem settings for memory limits.

💡 In .NET / EF Core:

Use simple query logging or diagnostic interceptors:

optionsBuilder.LogTo(Console.WriteLine, LogLevel.Information);

You can also tag queries for easy tracking:

var query = db.Orders.TagWith("Top slow query: Orders Dashboard")

.Where(o => o.Status == "Pending");

What .NET engineers should know:

- 👼 Junior: Be able to identify slow queries via logs and EF Core timing; understand that database design affects performance.

- 🎓 Middle: Read execution plans, detect missing indexes or bad joins, and fix obvious inefficiencies.

- 👑 Senior: Diagnose advanced issues: parameter sniffing, plan cache reuse, or I/O bottlenecks; know when to recompile, refactor, or redesign data models.

📚 Resources:

❓ What is parameter sniffing, and how can it cause performance issues?

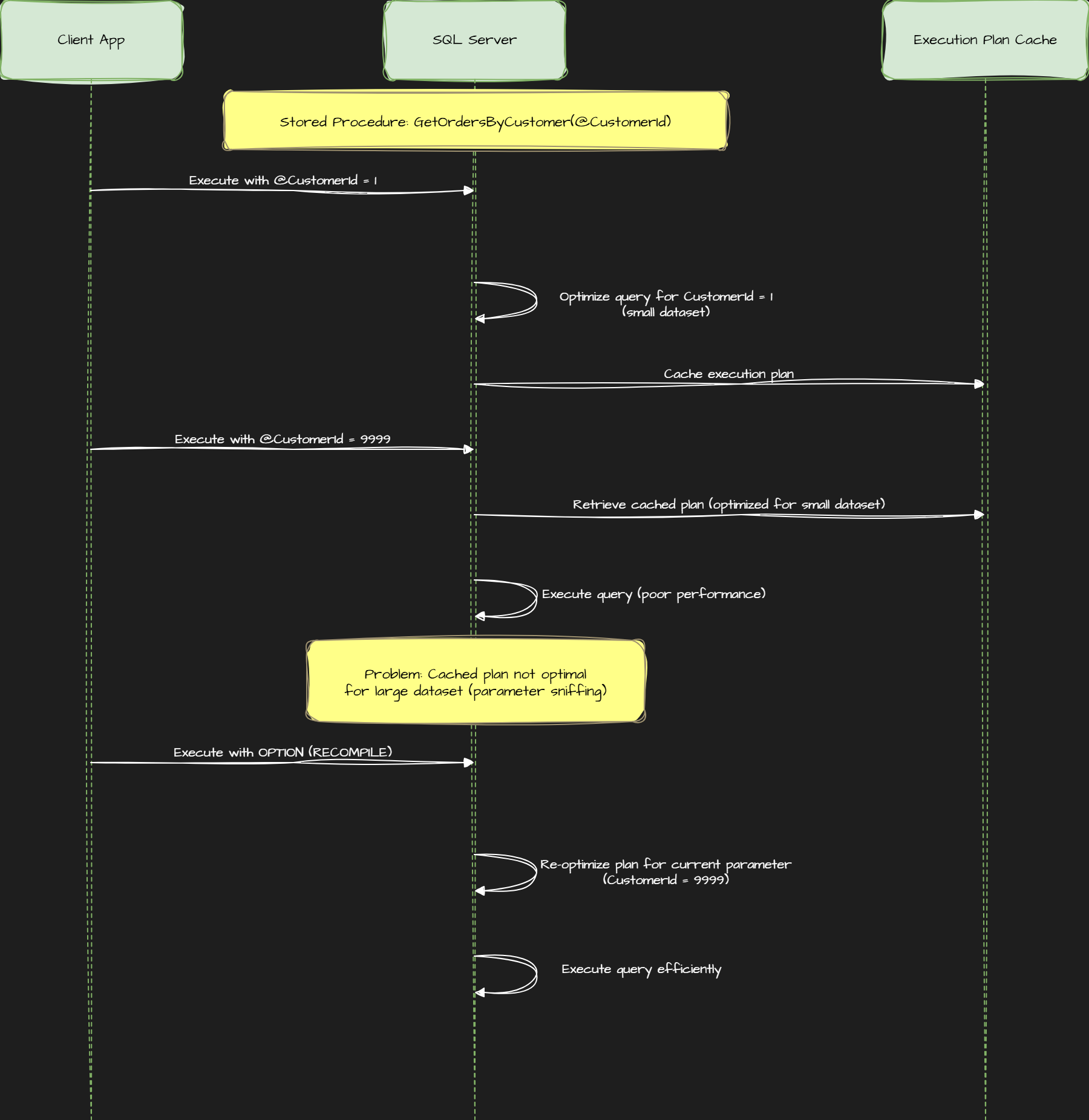

Parameter sniffing occurs when the database engine caches an execution plan for a query or stored procedure based on the first parameter values it receives and then reuses that plan for all subsequent executions, even when parameter values vary drastically.

Example of the problem

Let’s say you have a stored procedure:

CREATE PROCEDURE GetOrdersByCustomer

@CustomerId INT

AS

BEGIN

SELECT *

FROM Orders

WHERE CustomerId = @CustomerId;

END;- The first call might be for a small customer (10 orders).

- SQL builds a plan optimized for a few rows, likely using an Index Seek.

- The next call might be for a large customer (100,000 orders).

- The same cached plan runs, causing slow performance, perhaps a Nested Loop Join instead of a Hash Join.

That’s parameter sniffing a plan optimized for one scenario reused where it doesn’t fit.

🔍 Why it happens

SQL Server and other databases cache query plans to save CPU time.

But if the data distribution is skewed (some customers have 10 rows, others 100k), the “sniffed” parameter can lead to inefficient plans for future calls.

✅ How to fix it

- If performance depends heavily on parameter values:

OPTIMIZE FOR UNKNOWNorRECOMPILE. - For predictable parameters, keep sniffing, as caching can improve overall performance.

Option 1 — Use local variables

This prevents the optimizer from “sniffing” the parameter value.

DECLARE @cid INT = @CustomerId;

SELECT * FROM Orders WHERE CustomerId = @cid;

Downside: the plan might not be fully optimized for any specific parameter, but it avoids extremes

Option 2 — Use OPTIMIZE FOR UNKNOWN

SELECT * FROM Orders

WHERE CustomerId = @CustomerId

OPTION (OPTIMIZE FOR UNKNOWN);Tell SQL Server to ignore the first parameter value and use general statistics instead.

Option 3 — Use RECOMPILE hint

SELECT * FROM Orders

WHERE CustomerId = @CustomerId

OPTION (RECOMPILE);Builds a new plan for every execution — always optimal but more CPU-heavy.

✅ Always optimal for current parameters

⚠️ More CPU overhead — best for procedures run infrequently or with unpredictable data.

Option 4 — Manually clear or rebuild plans

- Clear cached plan:

DBCC FREEPROCCACHE;(use sparingly). - Rebuild statistics if they’re stale or skewed.

What .NET engineers should know:

- 👼 Junior: Know that stored procs can behave differently for different parameters.

- 🎓 Middle: Understand that cached query plans cause parameter sniffing; know how to fix it with

RECOMPILEorOPTIMIZE FOR UNKNOWN. - 👑 Senior: Diagnose sniffing with execution plans,

sp_BlitzCache, or Query Store; design queries and statistics to minimize skew effects in high-load systems.

📚 Resources:

❓ How does indexing improve read performance but slow down writes?

Indexes speed up reads, but each index adds maintenance work to writes.

When you INSERT, UPDATE, or DELETE a row:

- The table’s data is modified and

- Every related index must also be updated or rebalanced.

This extra work increases CPU, I/O, and sometimes lock contention.

Example:

CREATE INDEX IX_Users_Email ON Users(Email);Now queries are filtering by Email run much faster—but inserting or changing an email takes slightly longer because because SQL must also insert into the index tree.

💡 Best practices:

- Create indexes only for columns used in

WHERE,JOIN, orORDER BY. - Avoid “just-in-case” indexes.

- Drop unused or duplicate indexes — check system views like:

SELECT * FROM sys.dm_db_index_usage_stats;

- Rebuild or reorganize fragmented indexes periodically.

- Use filtered, covering, or composite indexes for precision instead of many single-column ones.

What .NET engineers should know:

- 👼 Junior: Indexes make reads faster but slow down writes because the database must keep them in sync.

- 🎓 Middle: Know how to design indexes for query patterns (

WHERE,JOIN,ORDER BY) and avoid over-indexing. - 👑 Senior: Balance read vs write workloads; minimize index count in high-write systems, use filtered or composite indexes, monitor fragmentation, and index maintenance schedules.

📚 Resources:

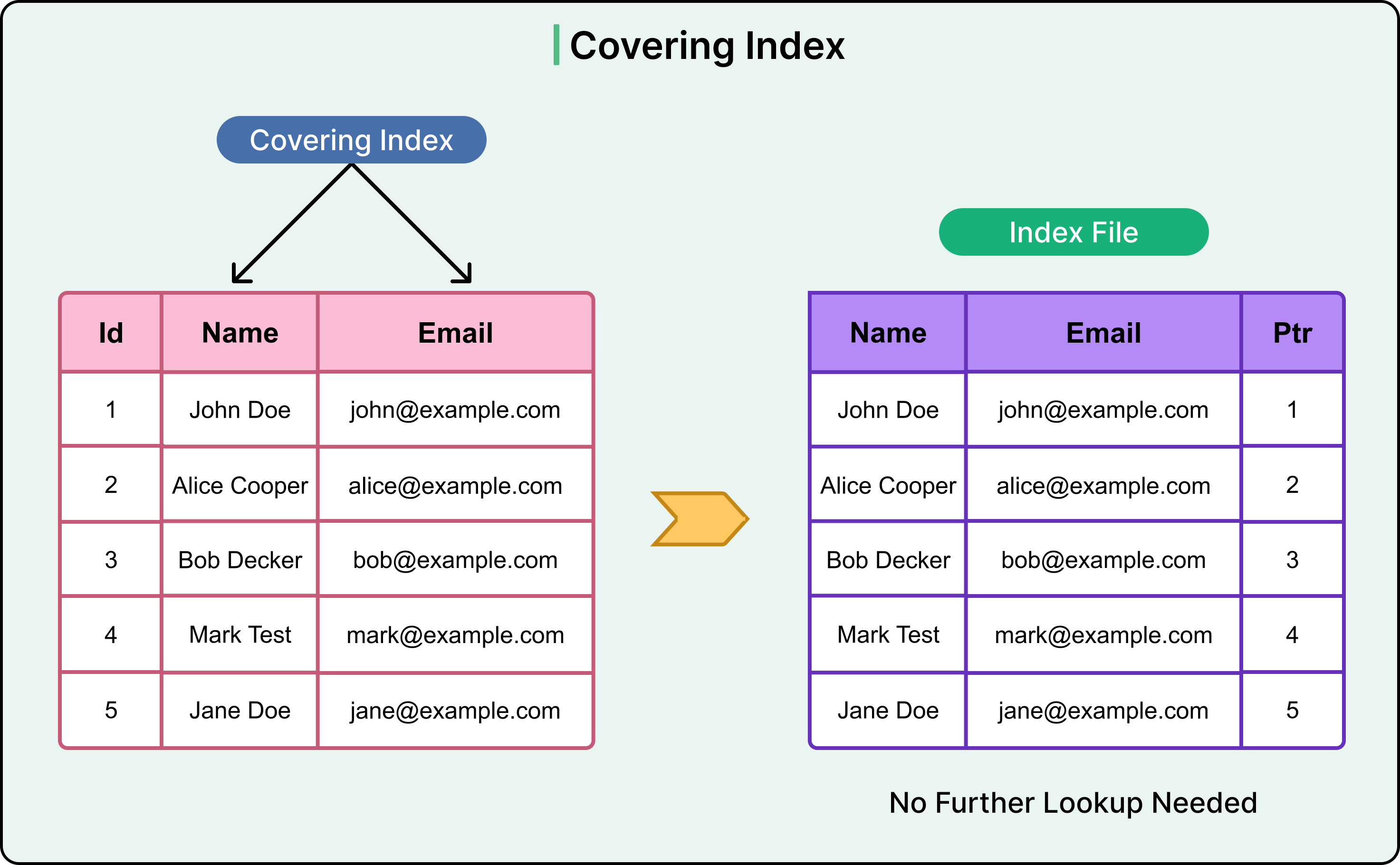

❓ Could you explain what a covering index is and how it can enhance performance?

A covering index (also called a covering or cover index) is an index that contains all the columns needed to satisfy a query, both for filtering and for returning data.

Because everything is already in the index, the database doesn’t have to go back to the main table (called a bookmark lookup or heap lookup) to fetch missing columns.

How it helps

- Fewer reads: The database doesn’t need to jump between the index and the table.

- Less I/O: Data is fetched from smaller index pages instead of whole table pages.

- Better caching: Indexes are often smaller and more likely to stay in memory.

However:

- Covering indexes take up more storage.

- They can slow down inserts and updates (because more data needs to be maintained).

- They should be used strategically for frequently executed, read-heavy queries.

Example:

-- Suppose you often run this query:

SELECT FirstName, LastName

FROM Employees

WHERE DepartmentId = 5;

-- A normal index:

CREATE INDEX IX_Employees_DepartmentId ON Employees(DepartmentId);

-- A covering index:

CREATE INDEX IX_Employees_DepartmentId_Covering

ON Employees(DepartmentId)

INCLUDE (FirstName, LastName);In SQL Server, the INCLUDE clause adds extra columns to the index that aren’t part of the search key but can be returned directly.

In PostgreSQL or MySQL, the same concept applies the index can “cover” the query if all selected columns are in it, even without an explicit INCLUDE clause.

What .NET engineers should know:

- 👼 Junior: Understand that a covering index can make a query faster by including all the needed columns, so the engine doesn’t read the main table.

- 🎓 Middle: Know how to identify queries that benefit from covering indexes and how to use the

INCLUDEclause in SQL Server. Be aware of the trade-off between storage and slower writes. - 👑 Senior: Design indexes based on query patterns, use

INCLUDEefficiently, and read execution plans to confirm the index truly covers the query. Should know how this works in different engines SQL Server (INCLUDE), PostgreSQL (multi-column indexes), MySQL (InnoDB clustered indexes).

📚 Resources:

❓ What are the best practices for indexing large tables?

Indexing large tables is all about striking a balance, speeding up queries without compromising inserts, updates, and storage. Done right, indexes make reads lightning-fast; done wrong, they can cripple write performance and bloat your database.

Here’s how to handle them effectively:

1. Index what you query — not everything

Every index has a cost. Each write (insert, update, delete) must also update all related indexes.

✅ Index columns used in:

WHERE,JOIN,ORDER BY, orGROUP BY- Frequent lookups or range filters

⚠️ Avoid “just in case” indexes; they add cost but no benefit.

2. Use composite indexes for common filters

If queries often combine multiple conditions, build composite indexes matching those patterns.

CREATE INDEX IX_Orders_CustomerId_Date

ON Orders (CustomerId, OrderDate DESC);✅ Works efficiently for queries like:

WHERE CustomerId = 42 AND OrderDate > '2025-01-01'

3. Keep indexes lean

The larger the index, the more memory it consumes.

- Avoid broad indexes (more than 3–4 key columns).

- Exclude large text or blob fields.

- Use

INCLUDEfor non-key columns instead of adding them all to the key.

CREATE INDEX IX_Orders_Status

ON Orders (Status)

INCLUDE (OrderDate, Total);4. Maintain and rebuild regularly

Large tables suffer from index fragmentation due to insert and delete operations.

✅ Schedule maintenance:

ALTER INDEX ALL ON Orders REBUILD WITH (ONLINE = ON);

or use REORGANIZE for lighter defragmentation.

💡 For massive databases, use incremental or partition-level rebuilds.

5. Monitor index usage

Remove unused indexes and tune underused ones.

In SQL Server:

SELECT * FROM sys.dm_db_index_usage_stats;In PostgreSQL:

SELECT * FROM pg_stat_user_indexes;Check for:

- Unused indexes - Drop them.

- Missing indexes: add them where scans dominate.

6. Consider partitioning

For massive datasets, partition tables and indexes by date or range. This reduces scan size and allows parallel operations.

CREATE PARTITION SCHEME psOrderRange AS PARTITION pfOrderRange

TO ([PRIMARY], [FG2025]);

7. Evaluate specialized indexes

- Use filtered or partial indexes to target subsets of data.

- Use columnstore indexes for analytical (OLAP) queries.

- Use hash indexes or memory-optimized tables for hot data in in-memory systems.

What .NET engineers should know:

- 👼 Junior: Understand that indexes make queries faster but slow down writes; add them only where needed.

- 🎓 Middle: Know how to design and maintain indexes, use composites, INCLUDE, and monitor fragmentation or usage.

- 👑 Senior: Architect index strategies for high-scale systems, balance read/write ratio, schedule maintenance, partition large tables, and apply columnstore or filtered indexes for workload-specific optimization.

📚 Resources:

❓ How do database statistics affect query performance?

Statistics tell the query optimizer how data is distributed in a table — things like row counts, distinct values, and data ranges.

The optimizer uses them to select the most efficient execution plan. Outdated or missing statistics can lead to poor choices, such as full table scans instead of index seeks.

-- Manually update statistics

UPDATE STATISTICS Orders WITH FULLSCAN;Keeping stats fresh helps the optimizer make accurate cost estimates and avoid evil query plans.

💡 Best practices:

- Keep auto-update statistics enabled (the default in SQL Server, PostgreSQL, and MySQL).

- Trigger manual updates for significant changes (>20% of rows modified):

UPDATE STATISTICS Orders;- For massive datasets, use:

- FULLSCAN for precision (expensive, but accurate).

- INCREMENTAL STATS on partitioned tables.

- AUTO CREATE STATISTICS for dynamic columns in SQL Server.

- Rebuild indexes periodically; it updates statistics automatically.

What .NET engineers should know:

- 👼 Junior: Know that statistics guide how the DB decides to use indexes; stale stats can cause full scans.

- 🎓 Middle: Understand auto-update thresholds and when to manually refresh statistics for large tables.

- 👑 Senior: Diagnose bad query plans caused by skewed or outdated stats; compare before/after execution plans, use FULLSCAN or incremental stats for partitioned data, and monitor the cardinality estimator behavior.

📚 Resources:

❓ What is the purpose of the NOLOCK hint in SQL Server, and what are the serious risks of using it?

The NOLOCK table hint tells SQL Server to read data without acquiring shared locks, meaning it doesn’t wait for ongoing transactions.

It’s equivalent to running the query under the READ UNCOMMITTED isolation level.

Example:

-- Typical usage

SELECT * FROM Orders WITH (NOLOCK);This can make queries appear faster, because they don’t block or get blocked.

However, the trade-off is significant: the query may read uncommitted, inconsistent, or even corrupted data.

⚠️ Risks of using NOLOCK

- Dirty reads. You might see rows that were inserted or updated but later rolled back.

- Missing or duplicated rows. Page splits, or concurrent updates, can cause the same row to appear twice or not at all.

- Phantom reads. Data might change mid-scan, so aggregates like

SUM()orCOUNT()are unreliable. - Corrupted results during page splits. SQL Server might read half an old page and half a new one, returning nonsense values.

✅ Safer alternatives

- Use READ COMMITTED SNAPSHOT ISOLATION (RCSI) — it reads from a versioned snapshot instead of dirty data:

ALTER DATABASE MyAppDB SET READ_COMMITTED_SNAPSHOT ON;Reads are non-blocking and consistent.

- Optimize queries and indexes to reduce blocking rather than skipping locks.

- Use

WITH (READPAST)only when intentionally skipping locked rows (e.g., queue systems).

What .NET engineers should know:

- 👼 Junior: Know

NOLOCKskips locks to speed up reads, but can return incorrect data. - 🎓 Middle: Understand real effects, dirty reads, missing or duplicated rows, and wrong aggregates. Know safer alternatives like

READ COMMITTED SNAPSHOT. - 👑 Senior: Avoid

NOLOCKin production code unless you absolutely understand the trade-off. Should know how to tune queries, isolation levels, and indexing to achieve concurrency without sacrificing data integrity.

🧩 Advanced SQL Patterns

❓ What is a CHECK constraint, and when would you use it?

A CHECK constraint enforces a rule on column values. It ensures that only valid data gets stored in the table. It’s evaluated every time a row is inserted or updated.

For example:

CREATE TABLE Employees (

Id INT PRIMARY KEY,

Age INT CHECK (Age >= 18),

Salary DECIMAL(10,2) CHECK (Salary > 0)

);

Here, the database won’t accept any employee under 18 or with a non-positive salary.

What .NET engineers should know:

- 👼 Junior: A CHECK constraint ensures only valid data is inserted, such as checking that age is above 18.

- 🎓 Middle: Use them to keep insufficient data out of the database. They’re great for static business rules that don’t depend on other tables.

- 👑 Senior: Keep constraints close to the data when rules are consistent and lightweight. Avoid overusing them for complex validations that change often; that logic belongs in the service layer.

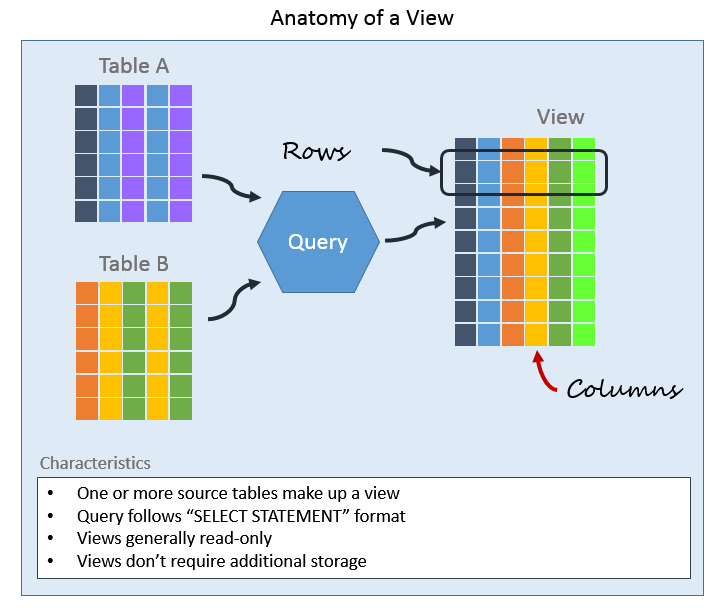

❓ When would you consider using a database view, and what are its limitations?

A view is a saved SQL query that acts like a virtual table. It doesn’t store data; it just stores the definition of a query that runs when you select from it. Views can simplify complex queries, improve security, and standardize access to data.

✅ When to use a view

1. Simplify complex queries

Instead of repeating joins or aggregations everywhere, wrap them in a view:

CREATE VIEW ActiveCustomers AS

SELECT Id, Name, Email

FROM Customers

WHERE IsActive = 1;Then use it efficiently:

SELECT * FROM ActiveCustomers;2. Encapsulate business logic

Centralize derived calculations to ensure multiple applications or reports share consistent results.

3. Improve security

Restrict users to specific columns or rows by granting access to the view instead of the base table.

4. Data abstraction layer

If table schemas change, you can preserve the exact view definition to avoid breaking queries in dependent applications.

⚠️ Limitations

1. Performance overhead

A view doesn’t store data; it re-runs the underlying query each time, which can be slow for heavy joins or aggregations.

2. Read-only in most cases

You usually can’t INSERT, UPDATE, or DELETE through a view unless it maps directly to a single base table (and even then, there are restrictions).

3. No automatic indexing

A view itself isn’t indexed, though you can create indexed/materialized views (if your DB supports them) to store results physically.

4. Maintenance complexity

If base tables change (column names are renamed or types are changed), views can break silently.

💡 When to avoid views

- For real-time, performance-critical queries, use materialized views or precomputed tables instead.

- When you need full CRUD operations.

- When application logic needs to shape data dynamically (views are static).

What .NET engineers should know:

- 👼 Junior: Know that a view is a saved SQL query that simplifies data access.

- 🎓 Middle: Understand how views help with abstraction and security, but can slow down complex queries.

- 👑 Senior: Use indexed/materialized views for performance, manage dependencies carefully, and monitor query plans for inefficiencies.

📚 Resources: Views SQL

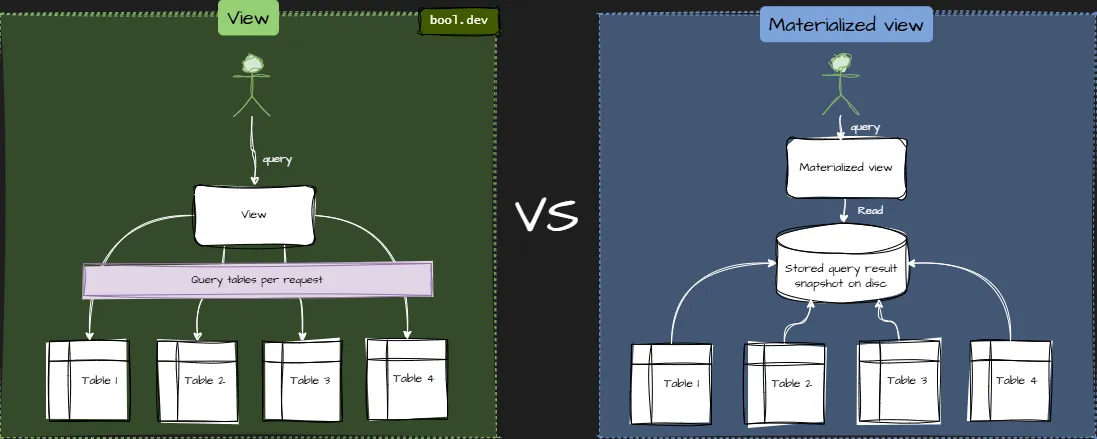

❓ What’s the difference between views and materialized views?

Both views and materialized views represent saved SQL queries, but they differ in how they handle data storage and performance.

| Feature | View | Materialized View |

|---|---|---|

| Data storage | Doesn’t store data — runs query on demand | Stores query result physically |

| Performance | Slower for complex joins/aggregates | Much faster for repeated reads |

| Freshness | Always current | Must be refreshed |

| Indexing | Can’t be indexed directly (except indexed views in SQL Server) | Can be indexed normally |

| Use case | Lightweight abstraction, security, reusable logic | Analytics, dashboards, reporting, read models |

Example (PostgreSQL):

-- Regular view

CREATE VIEW ActiveCustomers AS

SELECT * FROM Customers WHERE IsActive = TRUE;

-- Materialized view

CREATE MATERIALIZED VIEW CustomerStats AS

SELECT Region, COUNT(*) AS Total

FROM Customers

GROUP BY Region;Refresh when data changes:

REFRESH MATERIALIZED VIEW CustomerStats;You can schedule refreshes or trigger them via events.

💡 Performance tip:

- Materialized views reduce load on large joins and aggregations.

- You can index them, unlike regular views.

- But stale data remains a risk; choose refresh intervals wisely.

- Some databases (like PostgreSQL) support concurrent refreshes to avoid locking.

What .NET engineers should know:

- 👼 Junior: Views show real-time data; materialized views store precomputed data for speed.

- 🎓 Middle: Understand refresh trade-offs, materialized views are faster but can become stale.

- 👑 Senior: Design refresh strategies (scheduled, trigger-based, or event-driven) and decide between SQL Server indexed views or PostgreSQL materialized views for reporting and caching layers.

📚 Resources:

❓ What are the pros and cons of using stored procedures?

Stored procedures are precompiled SQL scripts stored in the database. They can encapsulate logic, improve performance, and simplify maintenance, but they can also make versioning, testing, and scaling more complex.

Stored Procedures Pros and Cons

- Pros: stable contract, plan caching, reduced wire traffic, centralized security/permissions, sometimes easier hot-fixing.

- Cons: portability and versioning friction, risk of duplicating domain logic in DB, and testing complexity.

Note: procs aren’t literally precompiled; execution plans are compiled on first use and cached. Use parameters; dynamic SQL inside a proc can still be injectable.

✅ When to use stored procedures:

- Data-heavy logic that benefits from server-side computation (reporting, aggregations, batch jobs).

- Security-critical operations that require strict validation at the database level.

- Systems with shared databases accessed by multiple services or tools.

❌ When not to:

- Application-level business logic that changes often.

- Microservices or DDD-based architectures: logic should live closer to the domain layer.

What .NET engineers should know:

- 👼 Junior: Stored procedures are reusable SQL scripts that run faster and help keep database logic secure.

- 🎓 Middle: They’re great for shared business rules and performance-critical operations, but hard to maintain in CI/CD pipelines.

- 👑 Senior: Use them strategically for data-heavy operations near the database layer. Avoid mixing too much app logic inside them; prefer code-based services when scalability, observability, or versioning matter.

📚 Resources: Stored procedures (Database Engine)

❓ How do you efficiently pass a list of values to a stored procedure in SQL Server versus PostgreSQL?

Both SQL Server and PostgreSQL can accept multiple values in a single call, but they handle them differently.

In SQL Server, you use Table-Valued Parameters (TVPs), while in PostgreSQL, you typically use arrays or unnest().

SQL Server: Table-Valued Parameters (TVP)

A TVP lets you pass a structured list (table) to a procedure.

You define a custom type once and reuse it.

-- Define a table type

CREATE TYPE IdList AS TABLE (Id INT);

-- Create stored procedure using TVP

CREATE PROCEDURE GetOrdersByIds @Ids IdList READONLY AS

BEGIN

SELECT * FROM Orders WHERE OrderId IN (SELECT Id FROM @Ids);

END;

-- Pass values from .NET

var ids = new DataTable();

ids.Columns.Add("Id", typeof(int));

ids.Rows.Add(1);

ids.Rows.Add(2);

using var cmd = new SqlCommand("GetOrdersByIds", conn);

cmd.CommandType = CommandType.StoredProcedure;

var param = cmd.Parameters.AddWithValue("@Ids", ids);

param.SqlDbType = SqlDbType.Structured;

cmd.ExecuteReader();- Pros: Fast, type-safe, avoids string parsing.

- Cons: SQL Server–specific.

PostgreSQL: Arrays and unnest()

PostgreSQL doesn’t have TVPs — instead, you can pass an array parameter and unpack it inside the query.

CREATE OR REPLACE FUNCTION get_orders_by_ids(ids int[])

RETURNS TABLE (order_id int, status text) AS $$

BEGIN

RETURN QUERY

SELECT o.id, o.status

FROM orders o

WHERE o.id = ANY(ids);

END;

$$ LANGUAGE plpgsql;

-- Call it

SELECT * FROM get_orders_by_ids(ARRAY[1, 2, 3]);You can also expand the array with unnest(ids) for joins or complex logic.

- Pros: Native, concise, and efficient.

- Cons: Doesn’t support structured types easily (only arrays of primitives).

What .NET engineers should know:

- 👼 Junior: Know that SQL Server and PostgreSQL handle lists differently — TVPs vs arrays. Understand that sending one significant parameter is better than concatenating strings like

'1,2,3'. - 🎓 Middle: Be able to create and use TVPs in SQL Server and arrays in PostgreSQL, understanding how to map them from .NET (

DataTable→ TVP,int[]→ array). - 👑 Senior: Know performance characteristics — batching vs TVP vs JSON, how to pass complex types (PostgreSQL composite types or JSONB), and when to offload this logic to the app layer.

📚 Resources:

❓ What’s the difference between temporary tables and table variables?

Temporary tables (#TempTable) and table variables (@TableVar) are used to store temporary data, but they differ in how they behave, how long they live, and how SQL Server optimizes them.

- Temporary tables act like real tables: they live in

tempdb, support indexes and statistics, and are visible to nested stored procedures. - Table variables live in memory (though also backed by

tempdb), don’t maintain statistics, and are scoped only to the current batch or procedure.

Example:

-- Temporary table

CREATE TABLE #TempUsers (Id INT, Name NVARCHAR(100));

INSERT INTO #TempUsers VALUES (1, 'Alice');

-- Table variable

DECLARE @Users TABLE (Id INT, Name NVARCHAR(100));

INSERT INTO @Users VALUES (2, 'Bob');Key Differences:

| Feature | #Temp Table | @Table Variable |

|---|---|---|

| Storage | tempdb | tempdb (lightweight) |

| Statistics | ✅ Yes | ❌ No (until SQL Server 2019+) |

| Indexing | ✅ Any index | Limited (PK/Unique only) |

| Transaction scope | ✅ Follows transaction | ❌ Not fully transactional |

| Recompilation | Can recompile for better plan | No recompile (fixed plan) |

| Performance | Better for large sets | Better for small sets |

| Scope | Session / connection | Batch / function |

What .NET engineers should know:

- 👼 Junior: Both store temporary data, but temporary tables are more flexible and better suited for larger datasets.

- 🎓 Middle: Use temporary tables when you need statistics or multiple joins. Use table variables for small lookups or for simple logic within a single procedure.

- 👑 Senior: Use temp tables for complex or large operations, TVPs for batch inserts from .NET, and monitor tempdb contention in high-load systems.

❓ How would you enforce a business rule like "a user can only have one active subscription" at the database level?

You can enforce it directly in the database using a filtered unique index or a trigger, depending on your database's capabilities.

Option 1: Unique filtered index (preferred)

If your database supports it (SQL Server, PostgreSQL), create a unique index that applies only when IsActive = 1.

CREATE UNIQUE INDEX UX_User_ActiveSubscription

ON Subscriptions(UserId)

WHERE IsActive = 1;This ensures that only one active subscription per user can exist; attempts to insert a second active one will fail automatically.

Option 2: Trigger-based validation

If your database doesn’t support filtered indexes, use a trigger to check before insert/update.

CREATE TRIGGER TR_EnsureSingleActiveSubscription

ON Subscriptions

AFTER INSERT, UPDATE

AS

BEGIN

IF EXISTS (

SELECT UserId

FROM Subscriptions

WHERE IsActive = 1

GROUP BY UserId

HAVING COUNT(*) > 1

)

BEGIN

ROLLBACK TRANSACTION;

RAISERROR('User cannot have more than one active subscription.', 16, 1);

END

END;This prevents commits that violate the business rule, regardless of how many concurrent requests hit the DB.

What .NET engineers should know:

- 👼 Junior: Know that database constraints can prevent invalid data even if the app misbehaves.

- 🎓 Middle: Understand filtered indexes and how they enforce conditional uniqueness.

- 👑 Senior: Design proper DB-level constraints and triggers to enforce rules safely under concurrency and heavy load.

📚 Resources:

❓ What are some strategies for efficiently paginating through a huge result set, as opposed to using OFFSET/FETCH?

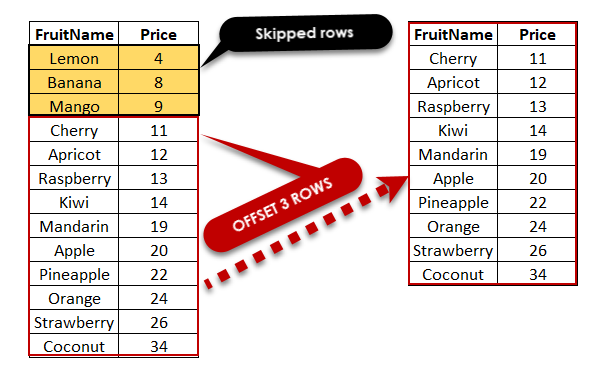

OFFSET/FETCH (or LIMIT/OFFSET) works fine for small pages, but it gets slower as the offset grows, the database still scans all skipped rows. For large datasets, you need smarter paging.

Better pagination strategies:

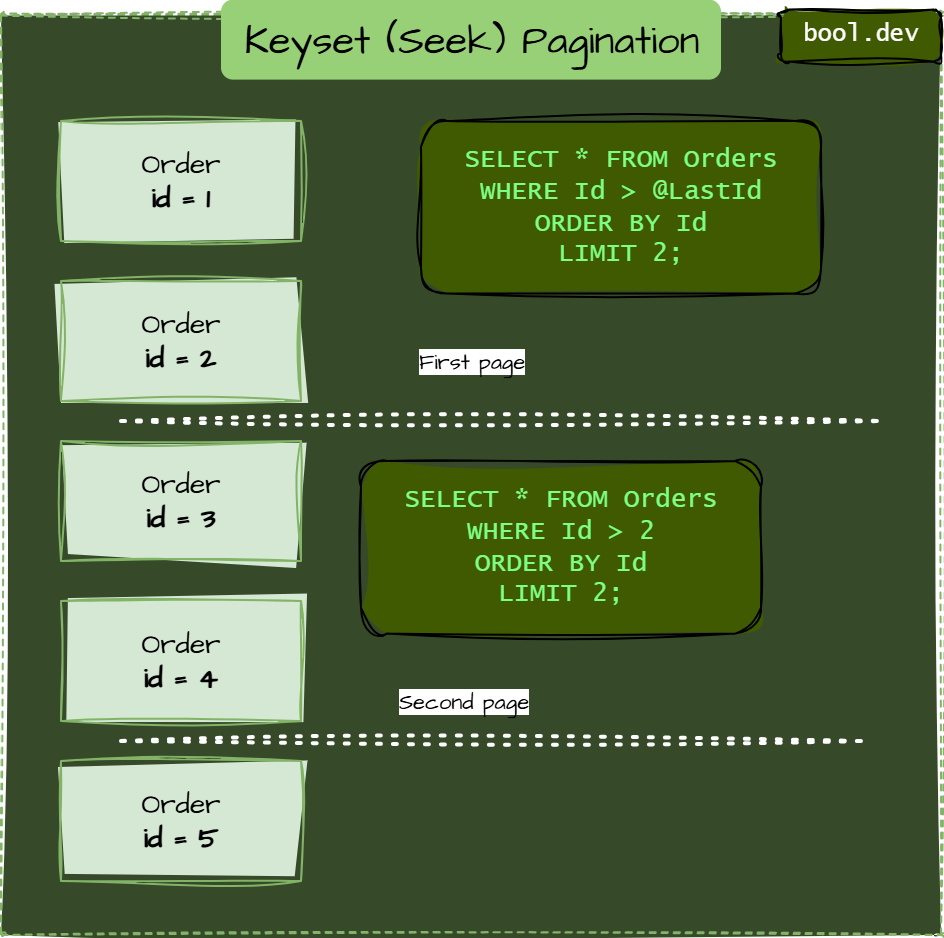

Keyset (Seek) Pagination

Use the last seen key instead of OFFSET.

SELECT * FROM Orders

WHERE Id > @LastId

ORDER BY Id

LIMIT 50;Fast because it uses an index and skips directly to the next page. Works only when your ID is sortable (is not suitable for UUID V4).

Bookmark Pagination

Used when sorting by multiple columns.

You remember the last record’s values and continue from them.

SELECT * FROM Orders

WHERE (OrderDate, Id) > (@LastDate, @LastId)

ORDER BY OrderDate, Id

FETCH NEXT 50 ROWS ONLY;

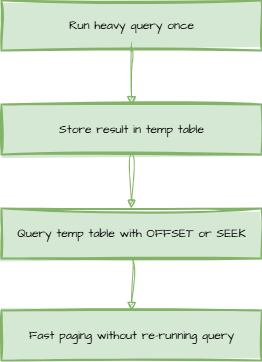

Precomputed / Cached Pagination /Snapshot pagination

For reports or exports, materialize results into a temp table or cache so paging doesn’t re-run the same heavy query each time.

Use proper index

Always ensure the ORDER BY columns are indexed, seek-based pagination relies on it.

What .NET engineers should know:

- 👼 Junior: Know that

OFFSETgets slower on big tables. - 🎓 Middle: Use keyset pagination for large or live data.

- 👑 Senior: Combine seek-based paging with caching, filtering, or precomputed datasets for scalable APIs.

📚 Resources: Pagination Strategies

❓ What is a trigger, and when should it be avoided?

A trigger is a special stored procedure that runs automatically in response to certain database events, such as INSERT, UPDATE, or DELETE. Triggers are useful for enforcing rules or auditing changes, but they can make logic hard to trace, debug, and maintain, especially when multiple triggers chain together.

Example:

CREATE TRIGGER trg_AuditOrders

ON Orders

AFTER INSERT, UPDATE

AS

BEGIN

INSERT INTO OrderAudit (OrderId, ChangedAt)

SELECT Id, GETDATE()

FROM inserted;

END;This trigger automatically logs every order creation or update.

When to Use:

- Enforcing data integrity rules not covered by constraints.

- Creating audit trails or history logs.

- Handling cascading actions (e.g., soft deletes).

When to Avoid:

- When business logic can live in the application layer.

- When triggers create hidden side effects that confuse other developers.

- When performance or scalability matters, triggers can cause recursive updates and slow down bulk operations.

What .NET engineers should know:

- 👼 Junior: Triggers run automatically after data changes, good for enforcing rules, but easy to misuse.

- 🎓 Middle: Use triggers mainly for auditing or strict integrity constraints. Avoid complex logic or multiple triggers on the same table.

- 👑 Senior: Keep the database lean, business rules belong in services, not triggers. If auditing is needed, prefer CDC (Change Data Capture), temporal tables, or event-based approaches for transparency and scalability.

📚 Resources: CREATE TRIGGER (Transact-SQL)

❓ How can you implement audit logging using SQL features?

Audit logging tracks who changed what and when in your database.

You can implement it using built-in SQL features like triggers, CDC (Change Data Capture), or temporal tables, depending on how detailed and real-time your auditing needs are.

Triggers (manual auditing):

CREATE TRIGGER trg_AuditOrders

ON Orders

AFTER UPDATE

AS

BEGIN

INSERT INTO OrderAudit (OrderId, ChangedAt, ChangedBy)

SELECT Id, GETDATE(), SUSER_SNAME()

FROM inserted;

END;- Pros: Simple to set up

- Cons: Harder to maintain, can affect performance

Change Data Capture (CDC):

EXEC sys.sp_cdc_enable_table

@source_schema = 'dbo',

@source_name = 'Orders',

@role_name = NULL;- Pros: Automatically tracks all changes