Best Practices for Effective Software Architecture Documentation

Software architecture documentation is critical for long-term success. It helps teams stay aligned, speeds up onboarding, and guides decision-making. Proper documentation makes it easier to maintain, scale, and evolve systems.

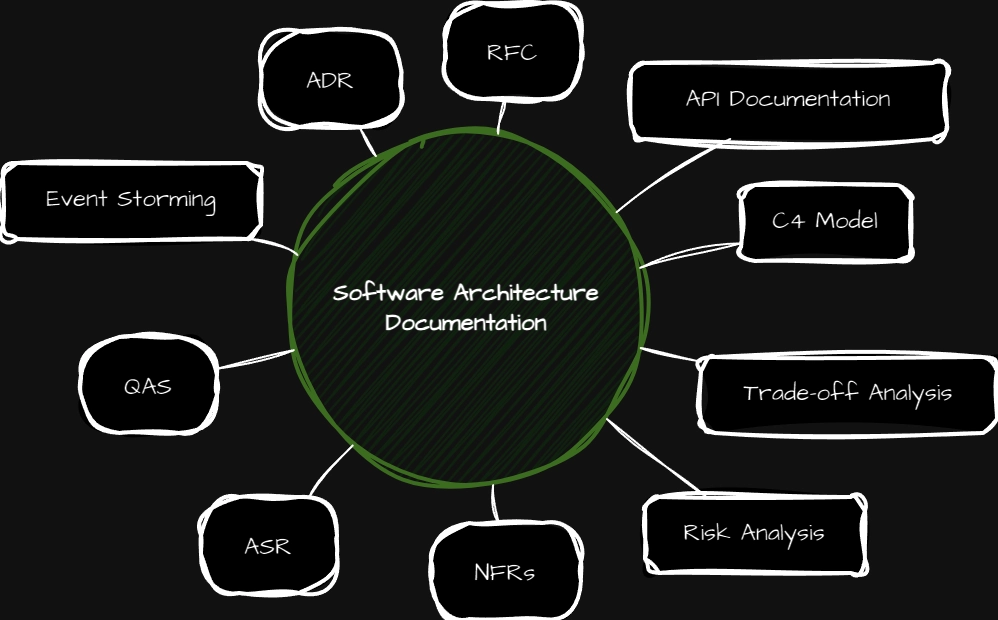

In this article, we will cover key techniques like:

- Architectural Decisions (ADRs, RFCs, Trade-offs)

- Modeling and Visualization (Event Storming, C4)

- Non-Functional and Quality Attributes (NFRs, QAS)

- Risk and Analysis (Risk Management, Trade-off Analysis)

- API Documentation

1. 📝 Architecture Decision Records (ADR)

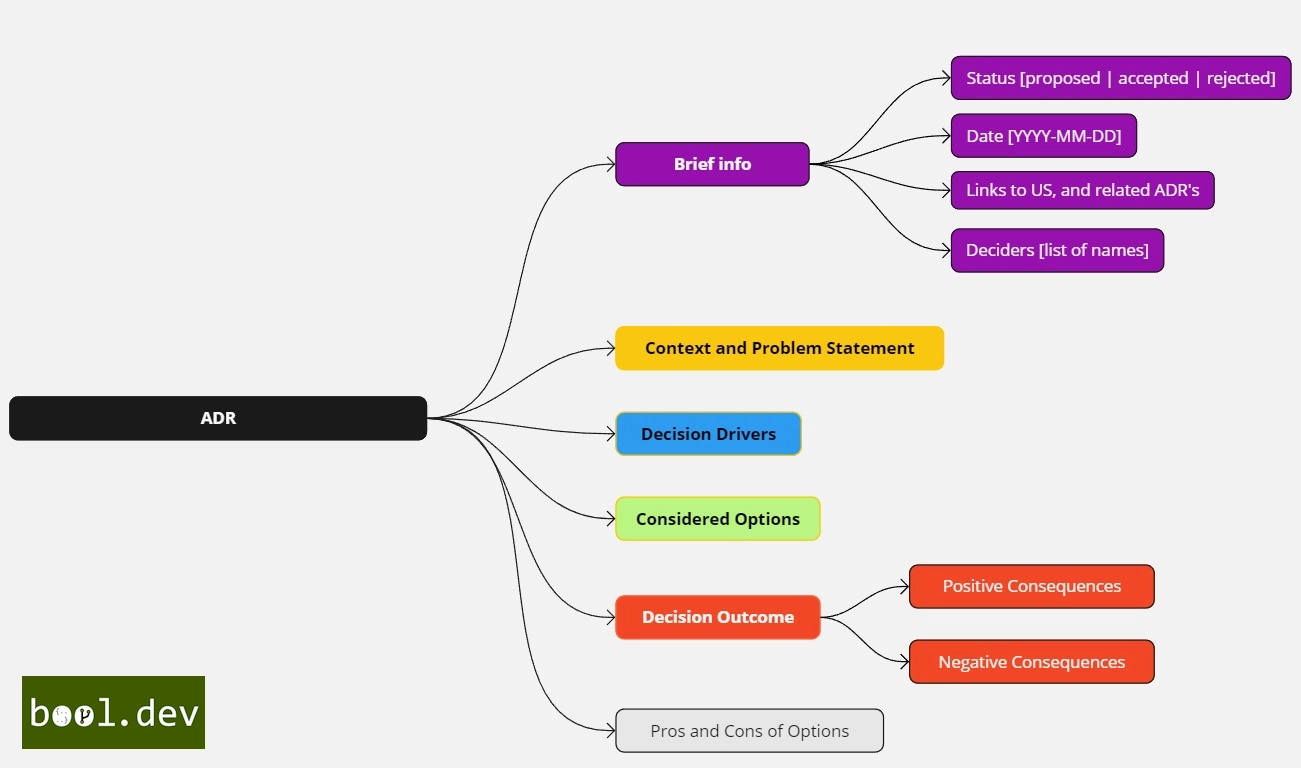

What are ADRs?

ADRs document key architectural decisions in a project. They capture the reasoning behind decisions, ensuring future clarity on why certain paths were chosen.

How to Use ADRs:

- When to Create: ADRs should be created when decisions involve significant architecture changes or trade-offs. Examples include migrating databases, adopting a new framework, or moving from monolithic to microservices.

- What to Include: Document the context, decision made, alternatives considered, and potential consequences.

- Who Maintains ADRs: The development team maintains and updates ADRs as architecture evolves.

Best Practices:

- Keep ADRs concise and focused on decisions, not technical implementation details.

- Store ADRs in version control (e.g., Git) to track changes over time.

- Update and Maintenance of ADRs: An ADR is a living document, so remember to update it whenever the architecture changes.
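The fields listed above (context, decision, alternatives, consequences) map onto a small template that can be generated and committed alongside the code. The following is a minimal sketch in Python; the `AdrRecord` class, its field names, and the `0007-title.md` file-naming convention are assumptions made for illustration and do not come from any particular ADR tool.

```python
from dataclasses import dataclass, field
from datetime import date
import re


@dataclass
class AdrRecord:
    """Hypothetical container for the fields an ADR should capture."""
    number: int
    title: str
    context: str
    decision: str
    alternatives: list[str] = field(default_factory=list)
    consequences: list[str] = field(default_factory=list)
    status: str = "proposed"
    decided_on: date = field(default_factory=date.today)

    def filename(self) -> str:
        # Assumed convention: zero-padded number plus a lowercase slug.
        slug = re.sub(r"[^a-z0-9]+", "-", self.title.lower()).strip("-")
        return f"{self.number:04d}-{slug}.md"

    def to_markdown(self) -> str:
        lines = [
            f"# {self.number}. {self.title}",
            "",
            f"* Status: {self.status}",
            f"* Date: {self.decided_on.isoformat()}",
            "",
            "## Context",
            "",
            self.context,
            "",
            "## Decision",
            "",
            self.decision,
            "",
            "## Alternatives Considered",
            "",
            *[f"* {item}" for item in self.alternatives],
            "",
            "## Consequences",
            "",
            *[f"* {item}" for item in self.consequences],
        ]
        return "\n".join(lines) + "\n"


# Example values are invented for illustration.
adr = AdrRecord(
    number=7,
    title="Migrate orders database to PostgreSQL",
    context="The current database cannot meet the projected write load.",
    decision="Move the orders schema to PostgreSQL.",
    alternatives=["Keep the current database and shard it"],
    consequences=["The team needs PostgreSQL operational training"],
    status="accepted",
    decided_on=date(2024, 1, 15),
)
print(adr.filename())
print(adr.to_markdown())
```

Writing the rendered text to a file under version control keeps the decision history reviewable in the same way as code changes.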

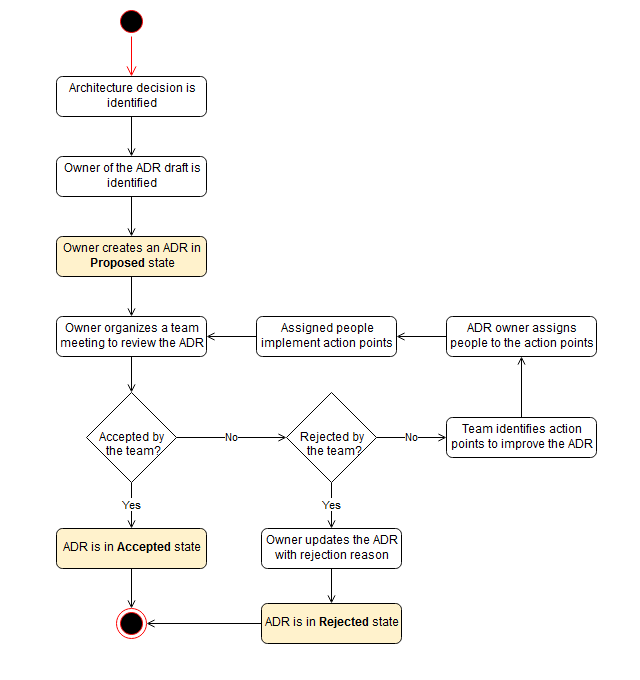

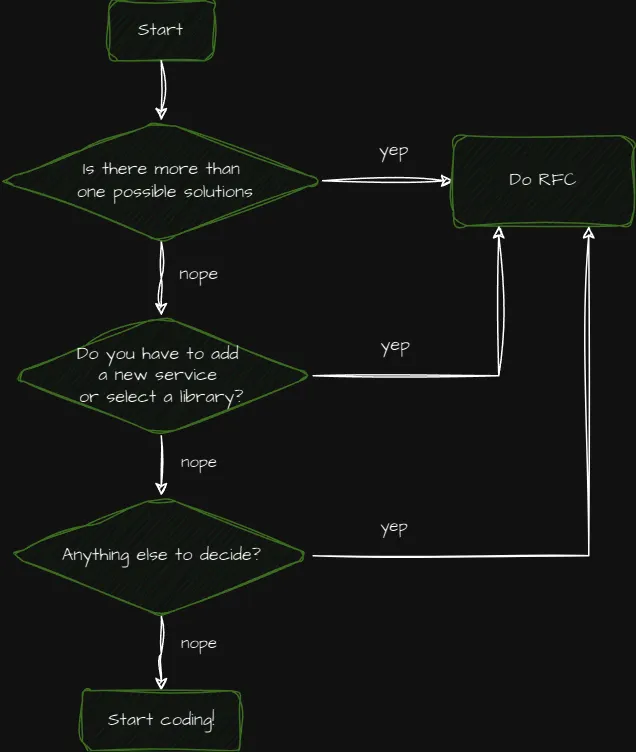

ADR process:

ADR Templates:

Here is a list of popular ADR templates:

- ADR template by Michael Nygard - simple and popular

- ADR template by Jeff Tyree and Art Akerman - more sophisticated.

- ADR template for Alexandrian pattern - simple with context specifics.

- ADR template for business case - more MBA-oriented, with costs, SWOT, and more opinions.

- ADR template of the Markdown Any Decision Records (MADR) project - my preferred option, as it includes the considered options and their pros and cons, which give more context to the people who will read the ADRs.

- ADR template using Planguage - more quality assurance oriented.

ADR example using MADR template:

# Use 0.0.0 as initial unreleased version number

* Status: accepted

* Date: 2020-03-31

## Context and Problem Statement

Approaches like semantic versioning use the presence or absence of an initial version

to determine the next version to publish. So determining this initial unreleased version

upon repository creation determines what the first release version will be.

## Considered Options

* No version, and the first version released will be `1.0.0`.

* `0.0.0`, and the first version released will be `0.1.0`.

## Decision Outcome

Chosen option: `0.0.0`, because `1.0.0` strongly indicates a mature code that is ready

for general use. One might eventually (and exceptionally) break the semantic versioning

rule by upgrading the first mature version to `1.0.0` (as done by

[`poetry`](https://python-poetry.org/history/)), but must follow strictly the

versioning rules afterwards.

ADR references:

- ADR in Microsoft Azure well-architected framework

- https://adr.github.io/ad-practices/

- ADR process by Amazon

2. 💬 Requests for Comments (RFC)

What are RFCs?

RFCs are documents used to propose, discuss, and formalize key technical decisions. They allow teams to gather feedback before finalizing significant changes or architectural choices.

How to Use RFCs:

- When to Create: Write an RFC when you need team-wide input on a major decision or change, e.g., adopting a new microservices pattern.

- What to Include: Describe the problem, propose a solution, and provide reasoning for the proposed approach.

- Who Participates: All relevant stakeholders (developers, architects, and sometimes non-technical stakeholders) should contribute.

Best Practices:

- Keep RFCs focused on one major decision to avoid overwhelming discussions.

- Make RFCs publicly accessible within the team (e.g., using a shared document or a platform like Confluence).

- Set deadlines for feedback to keep the process moving forward.

- Finalize the RFC after gathering input, and store it for future reference.

- Be sure to gather feedback from cross-functional teams, including security, quality assurance, and DevOps, to ensure all perspectives are considered.

Example RFC Outline:

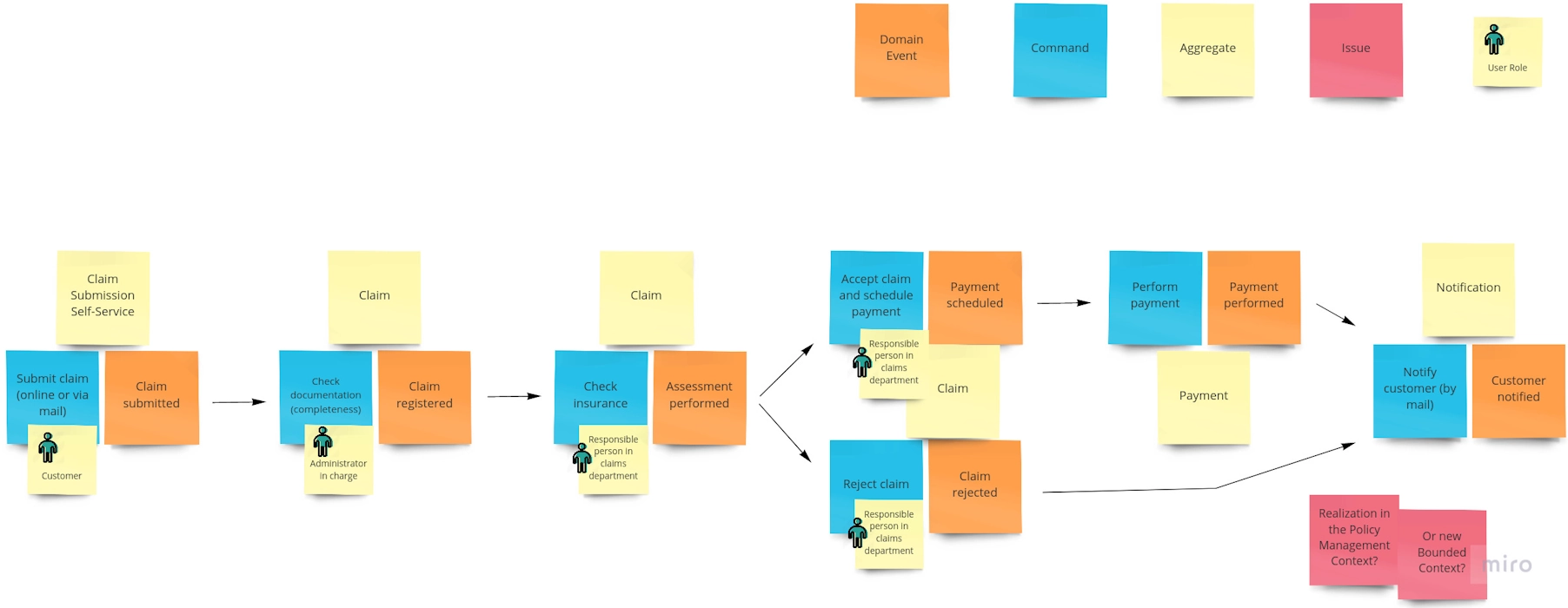

3. 🎯 Event Storming

What is Event Storming?

Event Storming is a collaborative workshop technique that helps teams explore and understand complex business domains. Event Storming involves mapping domain events—key system occurrences—to visualize workflows and processes.

How It Helps:

- Visualizes Complex Processes: Provides a clear picture of how different parts of the system interact.

- Encourages Collaboration: Brings together developers, domain experts, and stakeholders to share knowledge.

- Identifies Hidden Requirements: Uncovers insights that might be missed in traditional documentation.

- Defines Bounded Contexts: Helps identify areas of responsibility within the system, which is crucial for domain-driven design.

Best Practices for Using Event Storming in Architecture Documentation:

- Assemble a Diverse Team: Include developers, domain experts, and stakeholders with varied perspectives.

- Use Simple Tools: Stick to sticky notes and markers or simple digital tools to keep the focus on ideas, not the tooling.

- Start with Domain Events: Begin by identifying key events that occur within the business domain.

- Keep Language Non-Technical: Use everyday language to ensure everyone understands.

- Iterate and Refine: Revisit the event flow to add details or correct misunderstandings.

- Document the Outcomes: Capture the final model in your architecture documentation, possibly integrating it with C4 diagrams.

- Link to Non-Functional Requirements: Use insights to address performance, scalability, and other NFRs.

- Identify Risks and Trade-offs: Document any potential issues discovered during the session.

Integrating Event Storming with Documentation:

- Update ADRs: Reflect any architectural decisions influenced by the Event Storming session.

- Enhance C4 Models: Use the insights to refine your system diagrams.

- Inform RFCs: Incorporate findings into Requests for Comments for further team input.

References

- Introducing EventStorming (Book)

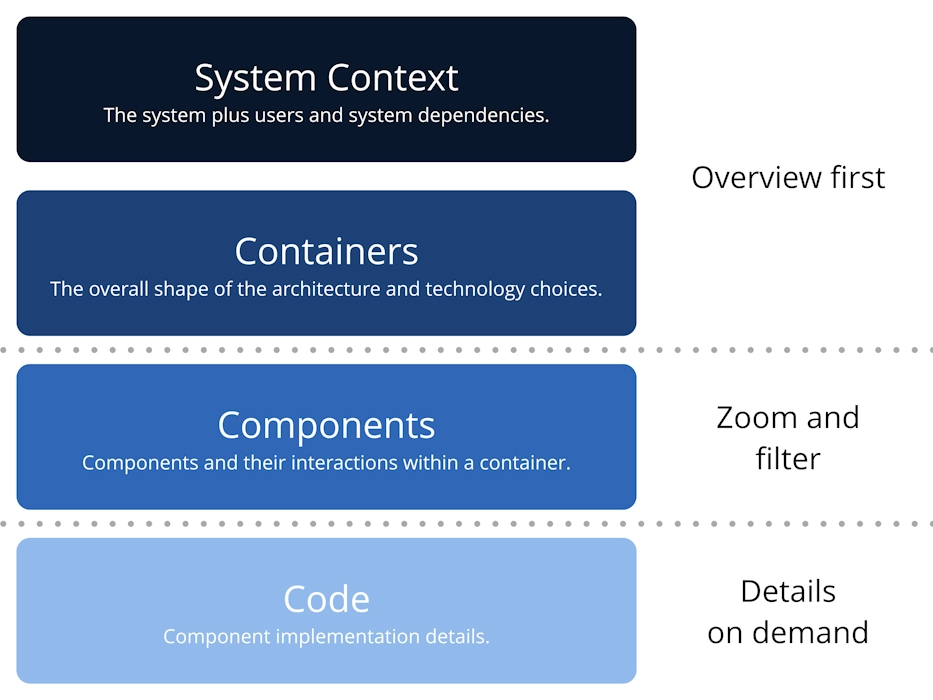

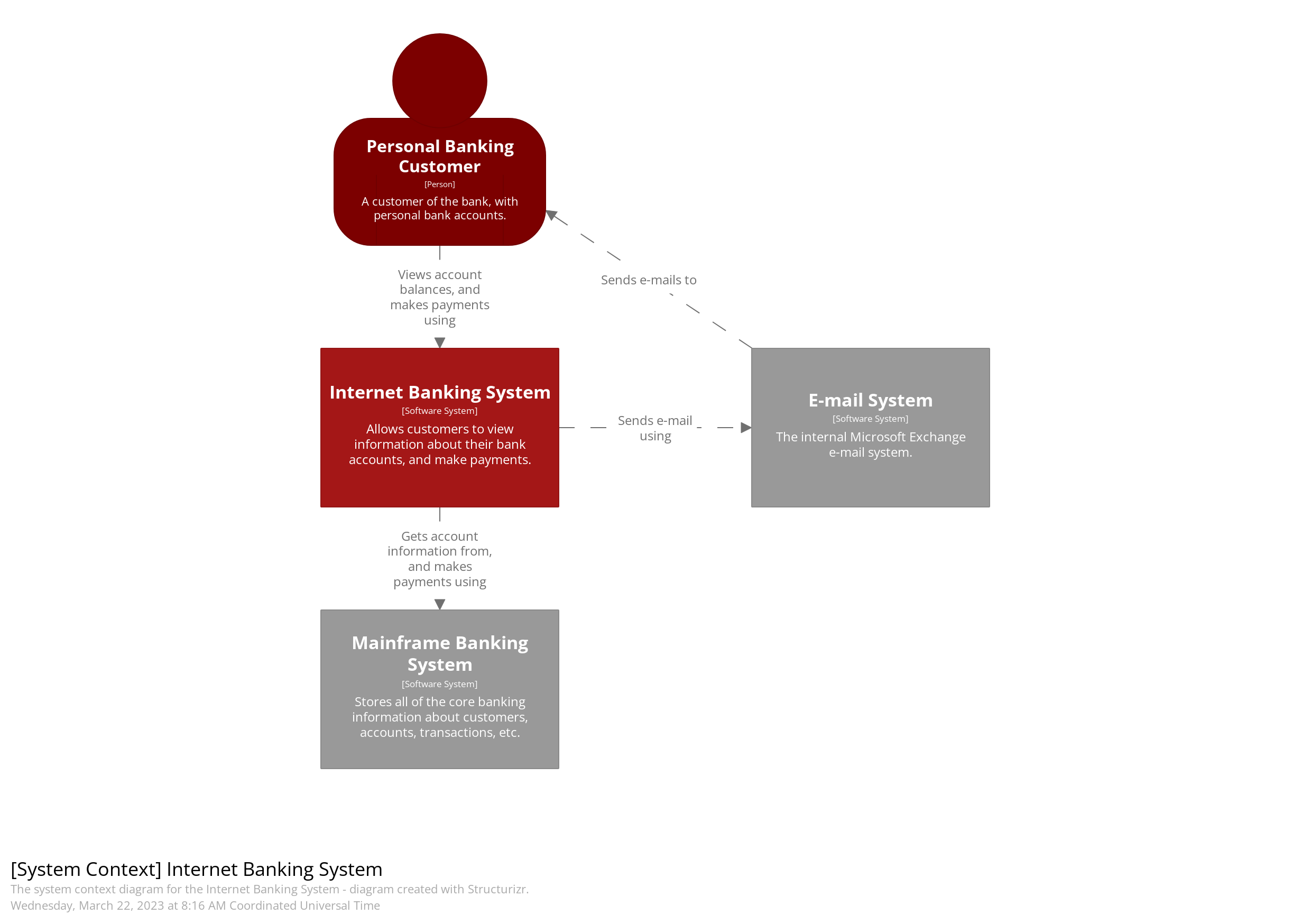

4. 📊 C4 Model

What is the C4 Model?

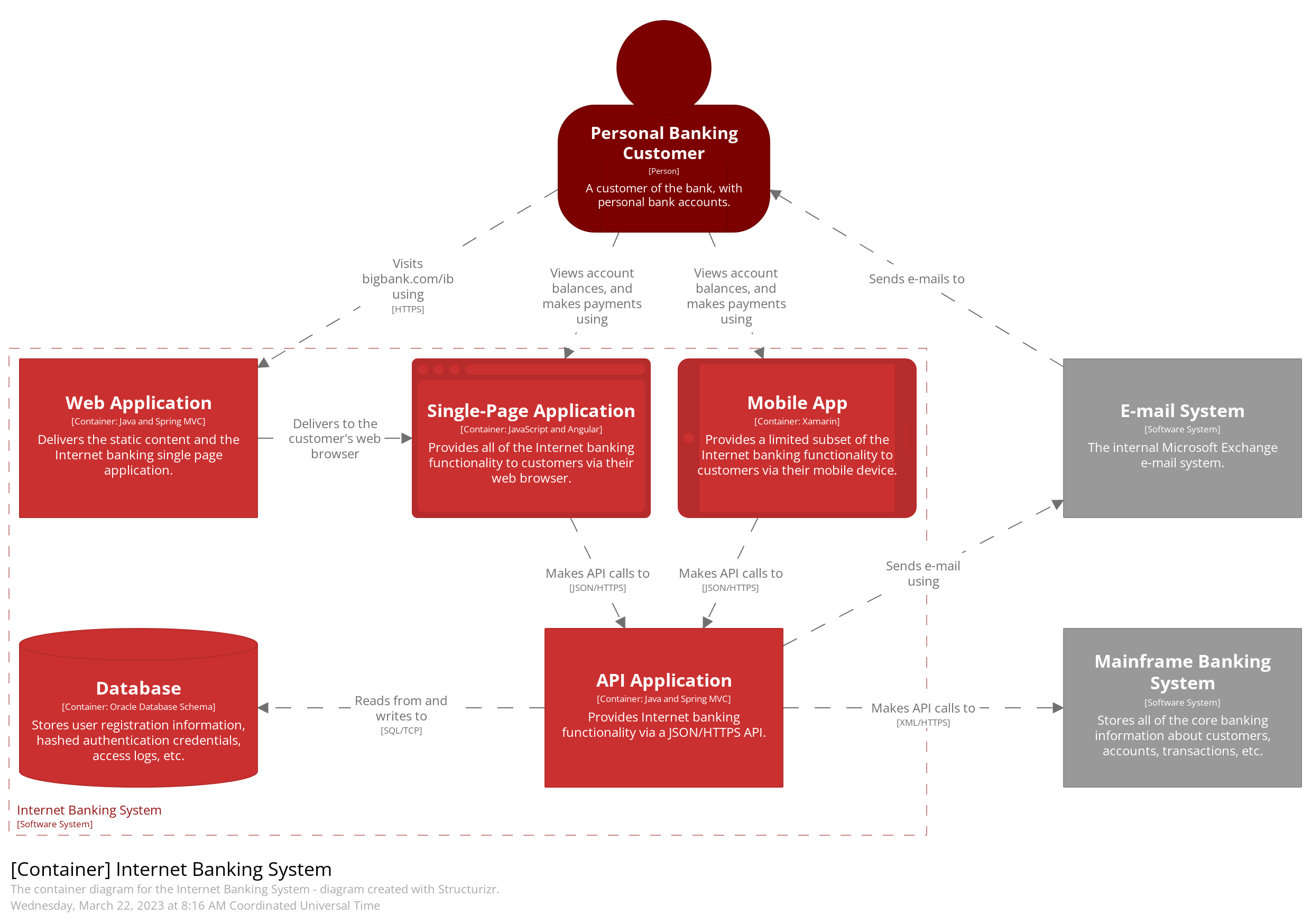

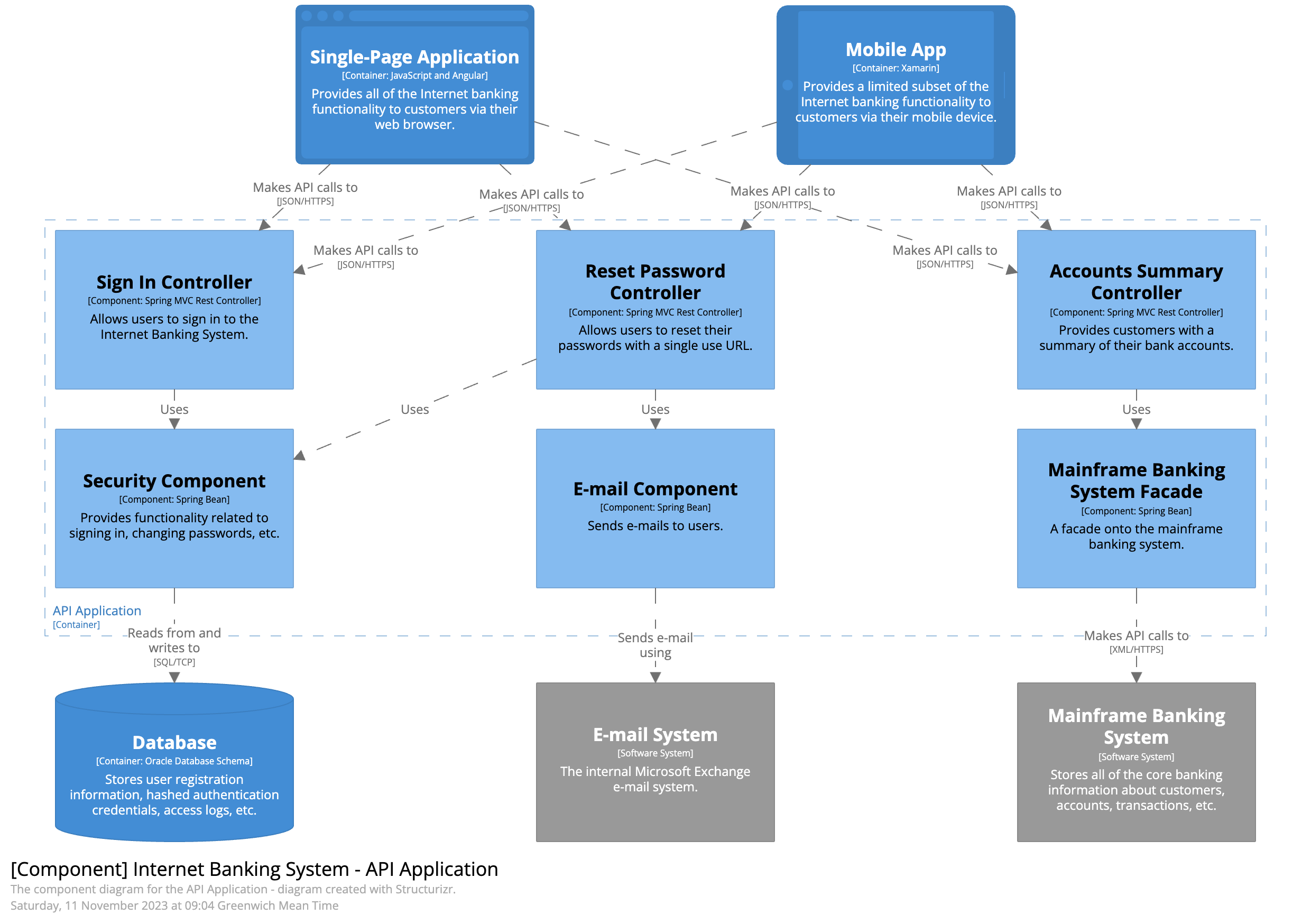

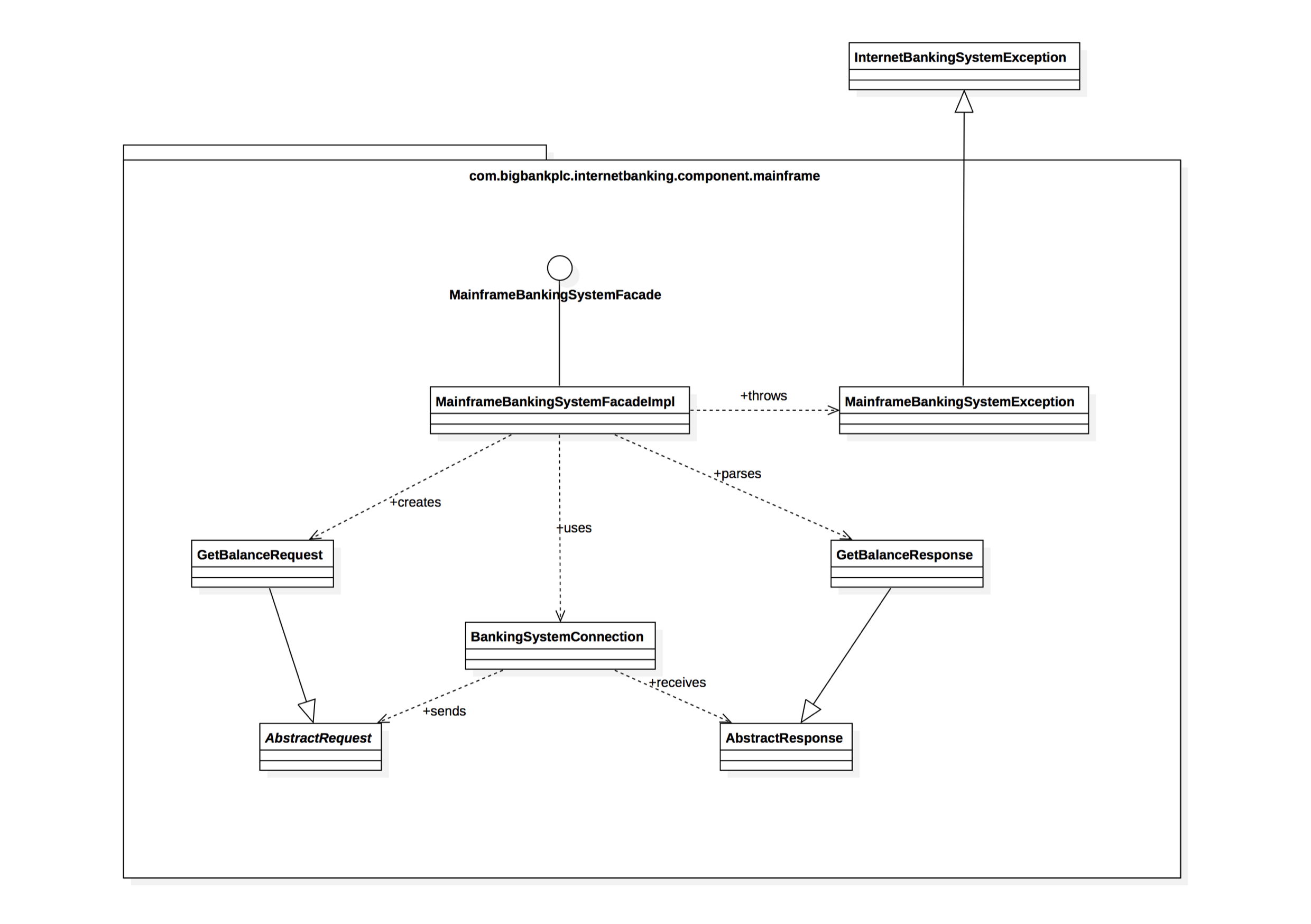

The C4 Model is a simple yet powerful way to visualize software architecture by breaking it into four levels: Context, Container, Component, and optionally, Code. It helps both technical and non-technical stakeholders understand the system at different levels of detail.

Context Diagram:

- Purpose: Provides an overview of the system in its environment, showing how it interacts with external users and systems.

- Who It's For: Suitable for both technical and non-technical stakeholders.

- Best Practice: Start with this high-level view to explain the system's role and its external interactions. Keep it simple and easy to understand.

Container Diagram:

- Purpose: Breaks the system into containers such as applications, databases, and services, and shows their interactions.

- Who It's For: Ideal for technical team members, but still understandable to non-technical stakeholders.

- Best Practice: Use this diagram to show how the system is architected on a higher level, focusing on containers and communication between them. It’s useful for both onboarding developers and clarifying system boundaries to stakeholders.

Component Diagram:

- Purpose: Zooms into individual containers to show the components within, detailing their responsibilities and interactions.

- Who It's For: Primarily for developers and architects who need to understand how the system is built internally.

- Best Practice: Only create Component Diagrams for the most critical parts of the system. These diagrams are technical, so focus on what is necessary for system development and maintenance.

Code Diagram:

- Purpose: Offers a detailed look into the internal code structure of specific components.

- Who It's For: Only for developers or architects who need to understand the exact code-level details.

- Best Practice: Avoid creating Code Diagrams unless needed for complex or critical codebases. Most teams can skip this level to avoid unnecessary complexity.

When to Go Into Detail:

- Use Context Diagrams to explain the system to everyone, including non-technical stakeholders like product managers and clients.

- Go deeper with Container Diagrams when you need to discuss the architecture of the system with technical teams.

- Create Component Diagrams when individual parts of the system need detailed technical explanations for developers.

- Reserve Code Diagrams for the rare cases where extremely granular documentation is required.

Integrating with Other Documentation:

- ADRs and RFCs: Use the C4 Model alongside ADRs and RFCs to show how decisions influence system components. For example, link an ADR to a container or component that was affected by a decision.

- Keep Diagrams Updated: Regularly update C4 diagrams as the system evolves. Store them in a version control system like Git or a shared documentation platform like Confluence.

Most Popular Tools for Creating C4 Diagrams

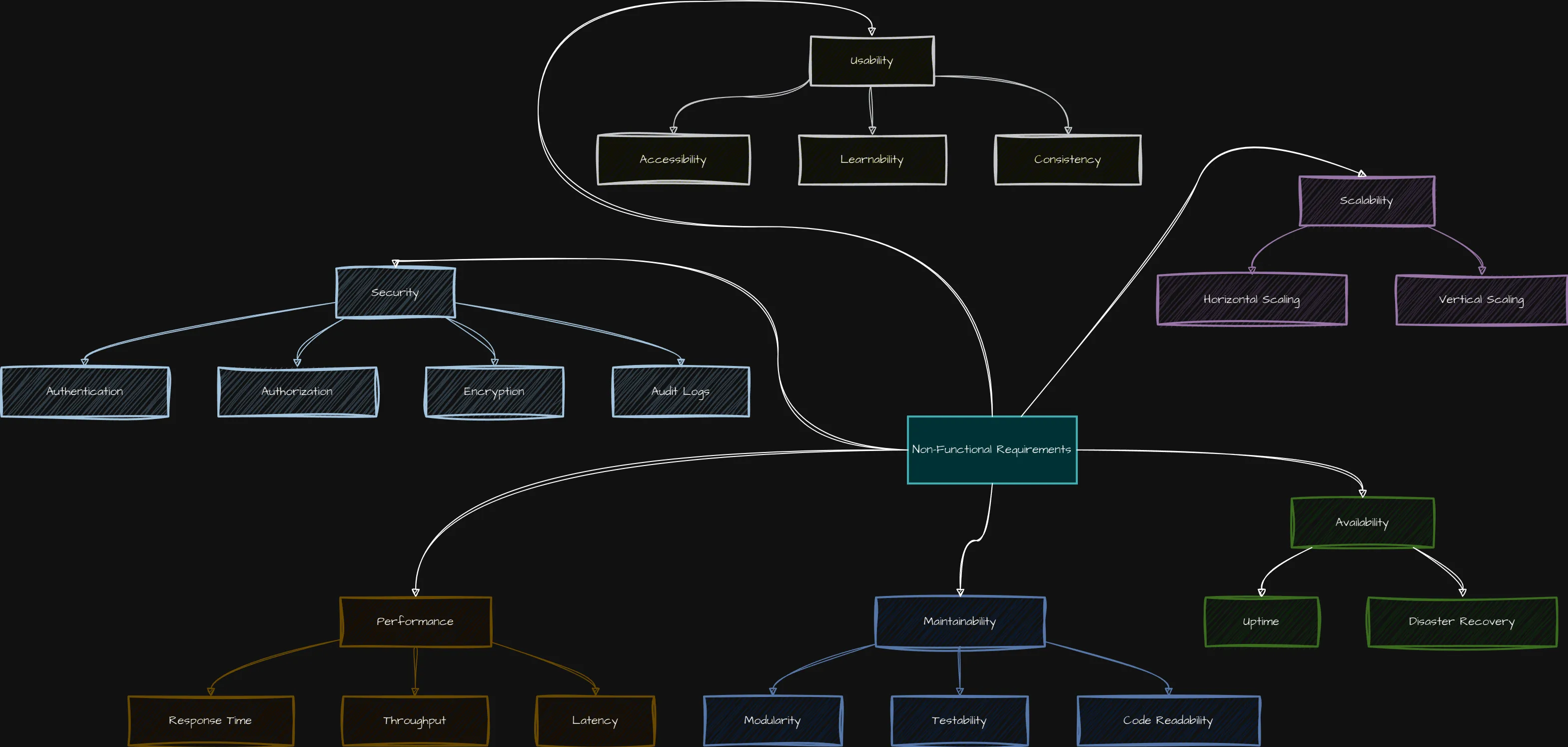

5. ⚙️ Non-Functional Requirements (NFRs)

Non-Functional Requirements (NFRs) define how the system should perform under certain conditions, focusing on qualities like performance, scalability, security, and availability.

Why NFRs Matter:

- Performance: Ensures the system can handle required loads (e.g., response time, throughput).

- Scalability: Defines how the system can grow or shrink depending on load (horizontal or vertical scaling).

- Security: Outlines protection against threats, including authentication, encryption, and compliance.

- Availability & Reliability: Specifies the uptime requirements and how the system handles failures (e.g., disaster recovery).

How to Document NFRs:

- Link NFRs to Architecture: Include NFRs in the architecture design, showing how components support performance, security, and scalability.

- Linking NFRs to Specific Components: Ensure that each NFR is associated with the relevant system components, using C4 diagrams to visualize how different parts of the system meet NFR requirements.

- Set Measurable Metrics: NFRs should be as specific and measurable as possible. For instance, a performance NFR might state: "The system must handle 10,000 concurrent users with a response time below 200 ms."

- Use ADRs for NFR-related Decisions: When architectural decisions impact performance, security, or scalability, document those decisions in ADRs.
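One way to keep NFRs measurable and tied to components is to record each one as data with a metric, a limit, and the component it applies to. The sketch below is only an illustration in Python: the `MeasurableNfr` class, the component name "Public API", and the measurements are all hypothetical, and the limits reuse the 10,000-user and 200 ms figures from the example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MeasurableNfr:
    """Hypothetical record tying an NFR to a metric, a limit, and a component."""
    name: str
    component: str          # e.g. the C4 container the requirement applies to
    metric: str
    limit: float
    unit: str
    higher_is_better: bool = False

    def is_met(self, measured: float) -> bool:
        if self.higher_is_better:
            # Capacity-style requirement: must reach at least the limit.
            return measured >= self.limit
        # Budget-style requirement: must stay strictly below the limit.
        return measured < self.limit


nfrs = [
    MeasurableNfr("Response time", "Public API", "latency", 200, "ms"),
    MeasurableNfr("Concurrency", "Public API", "concurrent users", 10_000,
                  "users", higher_is_better=True),
]

# Measurements would come from monitoring or a load test; these are made up.
measurements = {"latency": 180.0, "concurrent users": 8_500}

for nfr in nfrs:
    status = "met" if nfr.is_met(measurements[nfr.metric]) else "NOT met"
    print(f"{nfr.name} ({nfr.component}): {status}")
```

With the made-up measurements, the latency requirement is met and the concurrency requirement is not, which is the kind of gap an ADR or risk entry would then record.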


6. 🧪 Quality Attribute Scenarios (QAS)

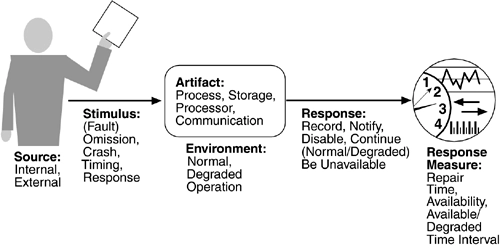

What are Quality Attribute Scenarios?

Quality Attribute Scenarios are specific, measurable, and testable descriptions of how a system should perform in various situations. These scenarios help define and document the system's non-functional requirements (NFRs), such as performance, scalability, security, usability, and availability.

Each scenario follows a structured format, ensuring system qualities are explicitly defined, measurable, and verifiable. This ensures that both the development team and stakeholders have a clear understanding of how the system should behave under different conditions.

Why It Is Important to Document QAS:

- Clarifies Non-Functional Requirements (NFRs): Quality Attribute Scenarios transform abstract requirements into concrete, testable scenarios.

- Aligns Expectations: They provide clarity to both technical and non-technical stakeholders on how the system should perform, behave, and scale.

- Measurable and Testable: Scenarios help identify specific metrics to track during testing, ensuring that the system meets its non-functional goals.

Quality Attribute Scenarios format:

A standard Quality Attribute Scenario consists of six parts:

- Source of Stimulus: Who or what initiates the scenario (e.g., user, external system)?

- Stimulus: The event that triggers the scenario (e.g., system load, data request).

- Environment: The context in which the event occurs (e.g., normal operation, under load, during maintenance).

- Artifact: The part of the system affected by the stimulus (e.g., database, API, user interface).

- Response: The system’s behavior or action in response to the stimulus (e.g., the system scales, provides a response, or fails).

- Response Measure: How the system’s response is measured (e.g., latency, throughput, number of users, recovery time).
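The six parts above can be captured as a small structured record, which makes it easy to spot scenarios that are missing a part before they are reviewed. This is a minimal sketch in Python; the `QualityAttributeScenario` class and the `missing_parts` helper are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class QualityAttributeScenario:
    """The six parts of a scenario, in the order listed above."""
    source_of_stimulus: str
    stimulus: str
    environment: str
    artifact: str
    response: str
    response_measure: str

    def missing_parts(self) -> list[str]:
        """Return the names of any parts that were left blank."""
        return [f.name for f in fields(self) if not getattr(self, f.name).strip()]


performance = QualityAttributeScenario(
    source_of_stimulus="User",
    stimulus="Request to retrieve data",
    environment="Normal load",
    artifact="Data API endpoint",
    response="Data is retrieved and returned",
    response_measure="Response in under 200 ms",
)

# An incomplete draft: environment and response measure are still blank.
draft = QualityAttributeScenario(
    "User", "Login attempt", "", "Auth service", "Access denied", ""
)

print(performance.missing_parts())
print(draft.missing_parts())
```

A scenario without a response measure cannot be tested, so a check like this is most useful for catching that particular gap early.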

Fitness Functions for Measuring Quality Attribute Scenarios

A fitness function is a measurable mechanism used to test whether a system meets the criteria outlined in a Quality Attribute Scenario (QAS). These functions provide clear metrics and automated or manual processes to assess the system’s behavior. Fitness functions ensure that the system’s architecture continues to meet Non-Functional Requirements as it evolves.

Fitness functions are typically implemented as automated tests, performance benchmarks, or manual inspections and become a part of continuous integration (CI) or testing processes to validate that the architecture adheres to defined quality standards.

Examples of Quality Attribute Scenarios

1. Quality Attribute Scenario for Performance

Scenario:

- Source of Stimulus: A user initiates a request to retrieve data.

- Stimulus: The request occurs under normal operating conditions.

- Environment: The system is under normal load.

- Artifact: API endpoint responsible for serving data.

- Response: The system retrieves and returns the data.

- Response Measure: The system must respond in less than 200 milliseconds.

Fitness Function:

- Automated Performance Tests: Write automated tests that simulate user requests under normal load and measure the response time.

- Example Test Tool: Use tools like Apache JMeter or Gatling to continuously monitor the response time.

- Pass Criteria: The fitness function ensures the response time remains under 200ms. If response times exceed this, the test fails, alerting the team to performance degradation.
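The pass criteria above can be expressed as a small check that runs in CI. The sketch below is a simplified stand-in for a JMeter or Gatling run, written in Python: `fake_endpoint` is a hypothetical placeholder for a real HTTP call, and evaluating the 95th percentile (rather than every single request) is an assumption, since the scenario only states a 200 ms limit.

```python
import math
import time


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def measure_latencies_ms(handler, requests: int) -> list[float]:
    """Call the handler repeatedly and record each duration in milliseconds."""
    samples = []
    for _ in range(requests):
        started = time.perf_counter()
        handler()
        samples.append((time.perf_counter() - started) * 1000)
    return samples


def check_latency_budget(samples_ms: list[float], budget_ms: float = 200.0,
                         pct: int = 95) -> tuple[bool, float]:
    """Return (passed, observed percentile) for the given latency budget."""
    observed = percentile(samples_ms, pct)
    return observed < budget_ms, observed


def fake_endpoint() -> None:
    # Hypothetical stand-in for a real request to the API under test.
    time.sleep(0.002)


passed, p95 = check_latency_budget(measure_latencies_ms(fake_endpoint, 20))
print(f"p95 = {p95:.1f} ms, passed = {passed}")
```

Keeping the measurement and the evaluation in separate functions means the same `check_latency_budget` logic can be applied to samples exported from a dedicated load-testing tool.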

2. Quality Attribute Scenario for Scalability

Scenario:

- Source of Stimulus: A surge of 1,000 new users per minute.

- Stimulus: A sudden increase in user registrations.

- Environment: The system is under peak load conditions.

- Artifact: The user registration service.

- Response: The system scales horizontally to handle increased load.

- Response Measure: The system maintains a response time of under 500 milliseconds with no downtime.

Fitness Function:

- Load Testing Tool: Implement load testing tools (e.g., K6 or Locust) to simulate high traffic and monitor the system’s scaling behavior.

- Example Test: The fitness function measures the response time under simulated load (e.g., increasing user requests to mimic peak conditions).

- Pass Criteria: The function continuously checks if the system maintains a response time below 500 milliseconds as it scales to handle more users.

3. Quality Attribute Scenario for Security

Scenario:

- Source of Stimulus: A malicious user attempts unauthorized access.

- Stimulus: The attempt occurs during normal operations.

- Environment: The system is functioning normally.

- Artifact: The authentication and authorization subsystem.

- Response: The system detects the unauthorized attempt and denies access.

- Response Measure: Unauthorized access is prevented within 5 seconds, and an alert is sent to the admin.

Fitness Function:

- Security Penetration Tests: Use automated penetration testing tools (e.g., OWASP ZAP, Burp Suite) to simulate security attacks and measure the system’s ability to detect and block unauthorized access.

- Example Test: Run security scans that attempt various attack vectors (e.g., SQL injection, brute force) to ensure unauthorized access is denied.

- Pass Criteria: The system must detect and block attacks within the specified 5-second window, and alerts must be sent to the administrator. Failure to do so triggers a warning for developers to review security mechanisms.

4. Quality Attribute Scenario for Availability

Scenario:

- Source of Stimulus: A system failure occurs (e.g., server crash).

- Stimulus: The failure happens during peak traffic.

- Environment: The system is experiencing high load.

- Artifact: Web application backend.

- Response: The system must failover to backup servers within 1 minute to avoid downtime.

- Response Measure: The system must have a recovery time objective (RTO) of less than 60 seconds.

Fitness Function:

- Automated Failover Tests: Use continuous monitoring and recovery testing tools (e.g., Chaos Monkey from Netflix’s Simian Army) to simulate failures and validate the failover process.

- Example Test: The fitness function runs automated failure scenarios where servers crash and ensures the backup infrastructure activates within the required time frame.

- Pass Criteria: The system should recover in under 60 seconds with no visible impact to users. Any failures to meet this timeline would flag the architecture for review.
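The recovery-time check can be sketched without real infrastructure by polling a health probe on a simulated clock. The Python code below is an illustration only: `measure_recovery_seconds`, `meets_rto`, and the two probe functions are hypothetical, and a real drill would inject the failure with a chaos tool and poll an actual health endpoint.

```python
from typing import Callable, Optional


def measure_recovery_seconds(
    is_healthy: Callable[[float], bool],
    timeout_s: float = 120.0,
    step_s: float = 1.0,
) -> Optional[float]:
    """Poll the probe on a simulated clock that starts when the primary fails.

    Returns the seconds elapsed at the first healthy answer, or None if the
    system did not recover within the timeout.
    """
    elapsed = 0.0
    while elapsed <= timeout_s:
        if is_healthy(elapsed):
            return elapsed
        elapsed += step_s
    return None


def meets_rto(recovery_s: Optional[float], rto_s: float = 60.0) -> bool:
    """The drill passes only if recovery happened in under the RTO."""
    return recovery_s is not None and recovery_s < rto_s


def fast_backup(seconds_since_failure: float) -> bool:
    # Hypothetical backup that starts serving 42 seconds after the failure.
    return seconds_since_failure >= 42


def slow_backup(seconds_since_failure: float) -> bool:
    # Hypothetical backup that needs 75 seconds, which breaks the 60 s RTO.
    return seconds_since_failure >= 75


for probe in (fast_backup, slow_backup):
    recovery = measure_recovery_seconds(probe)
    print(probe.__name__, recovery, meets_rto(recovery))
```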

References

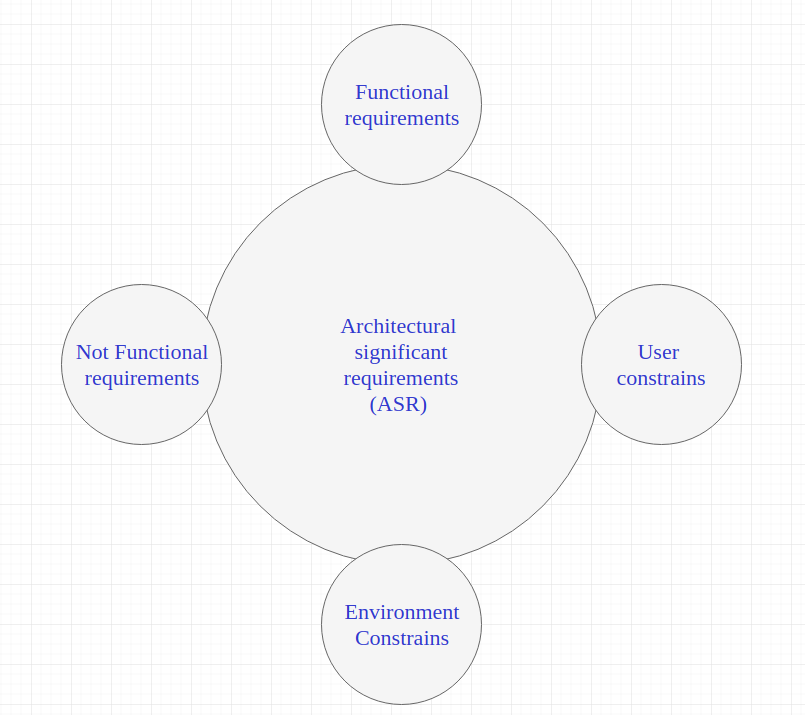

7. ♟️ Architecturally Significant Requirements (ASR)

What are Architecturally Significant Requirements?

ASRs are specific requirements, both functional and non-functional, that have a direct and major influence on the architecture design. They often include critical business, regulatory, and technical requirements that dictate how the system must be structured to meet certain goals.

Key Characteristics of ASRs:

- Importance: ASRs are considered "significant" because they directly affect key architectural decisions. If not addressed correctly, the architecture may fail to meet the system's core objectives.

- Higher Priority: ASRs are typically high-priority requirements that can significantly impact the system's success.

- Broad Scope: ASRs usually include functional and non-functional requirements, whereas NFRs are specifically non-functional.

ASR Contains:

- Functional requirements

- NFRs

- Environment constraints

- User constraints

Examples of ASRs:

- Compliance: The system must comply with GDPR and provide audit trails for data handling.

- Business-Critical Performance: The system must handle 1 million users daily with zero downtime.

- Integration: The system must integrate with a third-party payment gateway in real time.

- Platform Constraint: The system must deploy on Microsoft Windows XP and Linux.

- Audit trail: The system must record every modification to customer records for audit purposes.

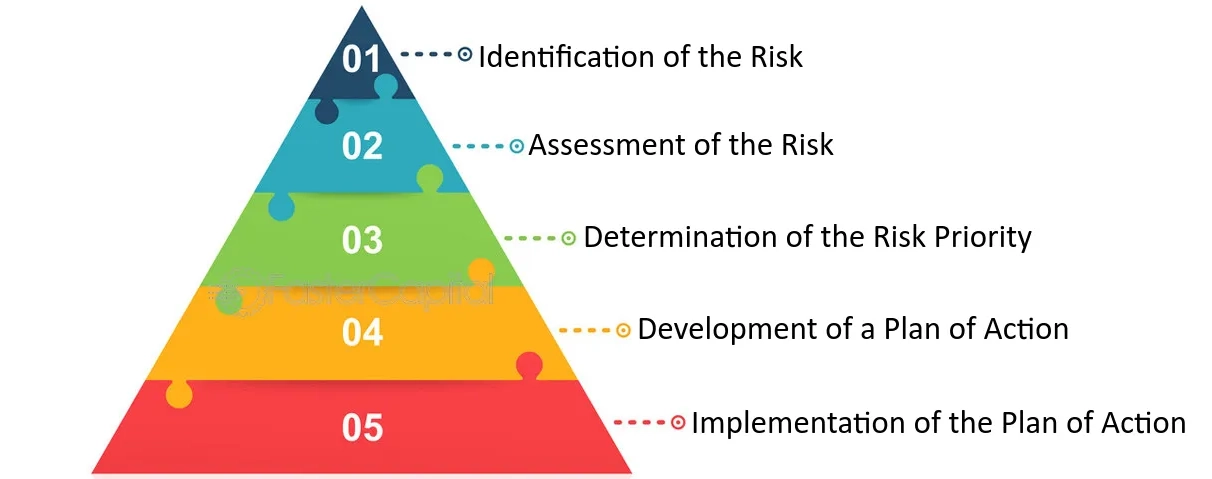

8. 🔍 Risk Analysis

What is Risk Analysis?

Risk analysis in software architecture is the process of identifying potential problems that could negatively affect the system and planning mitigation strategies. By proactively addressing risks, teams can ensure the system remains stable and resilient.

Why Risk Analysis Is Important:

- Proactive Planning: Identifying risks early allows teams to prevent or minimize their impact.

- System Resilience: Ensures the system remains operational under stress or unexpected conditions.

- Stakeholder Communication: Aligns expectations by clearly outlining potential risks and their consequences.

How to Document Risks:

- Include in ADRs or Separate Documentation: For each significant architectural decision, document associated risks and their potential impact.

- Categorize Risks: Common categories include performance, security, scalability, availability, and compliance.

- Mitigation Plans: Document how risks will be addressed. For instance, a performance risk could be mitigated with caching or horizontal scaling.

Risk Matrix Example:

| Risk ID | Risk | Effect | Impact | Priority | Mitigation | Effect After Mitigation | Impact After Mitigation | Priority After Mitigation |
|---|---|---|---|---|---|---|---|---|
| R001 | Performance Degradation | System slowdown under high load, affecting user experience | H | H | Implement caching, optimize queries | Reduced load issues, improved performance | M | M |
| R002 | Security Breach | Potential data theft or system compromise | E | H | Use encryption, conduct regular security audits | Higher security, reduced breach likelihood | L | L |

Explanation of Columns:

- Risk ID: Unique identifier for each risk.

- Risk: The potential risk to the system or project.

- Effect: The potential impact of the risk before any mitigation.

- Impact (E/H/M/L): The severity of the risk based on TOGAF's categories: Extremely High (E), High (H), Moderate (M), and Low (L).

- Priority: The urgency of addressing the risk before mitigation.

- Mitigation: The steps taken to reduce the risk.

- Effect After Mitigation: The adjusted potential impact after mitigation strategies are applied.

- Impact After Mitigation (E/H/M/L): Reassessment of the risk level after mitigation.

- Priority After Mitigation: The new priority after mitigation efforts are implemented.
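Keeping the matrix as data makes it possible to sort risks by severity and see how much each mitigation is expected to help. The following Python sketch encodes the two example risks; the `Risk` class and the numeric ranking of the E/H/M/L scale are assumptions made for illustration.

```python
from dataclasses import dataclass

# Assumed numeric ordering of the E/H/M/L scale, most severe highest.
SEVERITY_RANK = {"E": 3, "H": 2, "M": 1, "L": 0}


@dataclass(frozen=True)
class Risk:
    risk_id: str
    name: str
    impact: str             # before mitigation
    priority: str           # before mitigation
    residual_impact: str    # after mitigation
    residual_priority: str  # after mitigation

    def impact_reduction(self) -> int:
        """Number of severity levels the mitigation is expected to remove."""
        return SEVERITY_RANK[self.impact] - SEVERITY_RANK[self.residual_impact]


risks = [
    Risk("R001", "Performance Degradation", "H", "H", "M", "M"),
    Risk("R002", "Security Breach", "E", "H", "L", "L"),
]

# Most severe risks first: sort by impact, then by priority.
by_severity = sorted(
    risks,
    key=lambda r: (SEVERITY_RANK[r.impact], SEVERITY_RANK[r.priority]),
    reverse=True,
)

for risk in by_severity:
    print(
        f"{risk.risk_id} {risk.name}: impact {risk.impact} -> "
        f"{risk.residual_impact} (reduced by {risk.impact_reduction()} levels)"
    )
```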

References

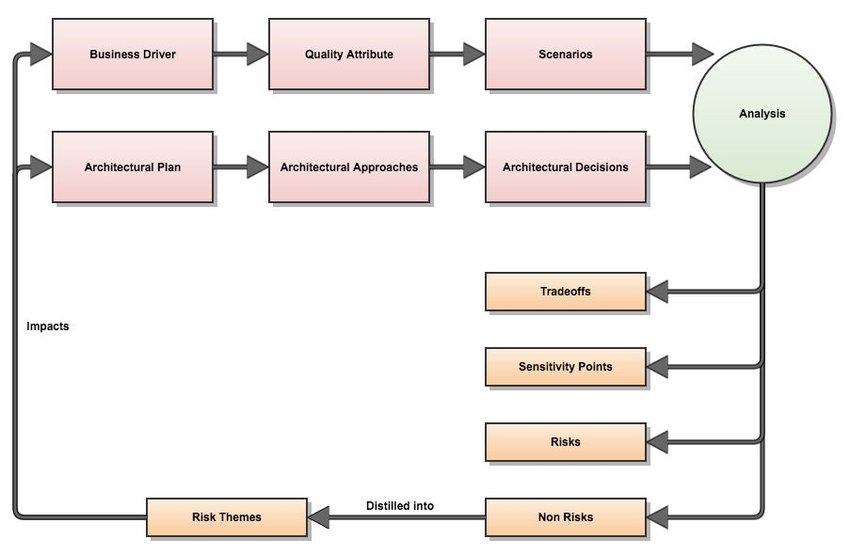

9. 🕵️ Trade-off Analysis

What is Trade-off Analysis?

Trade-off analysis in software architecture involves weighing the pros and cons of different design decisions. Every choice in architecture (e.g., performance vs. scalability, cost vs. flexibility) comes with trade-offs, and documenting these ensures that the reasoning behind decisions is clear for current and future teams.

Why Trade-off Analysis Matters:

- Informed Decision-Making: Teams need to make choices based on the overall project goals, understanding both benefits and drawbacks.

- Transparency: It helps stakeholders, including non-technical members, understand why certain decisions were made.

- Future Reference: As the system evolves, future developers can refer to documented trade-offs to understand the context of past decisions.

How to Document Trade-offs:

- Document in ADRs: Each ADR could include a section for trade-offs, explaining the consequences of the decision.

- Consider Alternatives: List the alternative solutions considered and describe why they were not chosen, along with their trade-offs.

- Link to Risk Analysis: Connect the trade-offs to the risks identified in your risk matrix, showing how different options impact the overall system.

References for Trade-off Analysis

- Integrating the Architecture Tradeoff Analysis Method (ATAM) with the Cost Benefit Analysis Method (PDF)

- Risk themes from ATAM data: preliminary results (PDF)

- TOGAF: Architecture Alternatives and Trade-Offs (Article)

Example of Trade-off Analysis

Simplified trade-off analysis:

## Trade-offs

- **Pros of Microservices:**
  - **Scalability:** Easier to scale individual services as needed.
  - **Flexibility:** Services can be developed and deployed independently.
  - **Fault Isolation:** Failures are contained within a single service.
- **Cons of Microservices:**
  - **Complexity:** Increased operational complexity due to managing multiple services.
  - **Latency:** Higher network latency due to communication between services.
  - **Consistency:** Ensuring data consistency across distributed services is harder.
- **Pros of Monolithic Architecture:**
  - **Simplicity:** Easier to develop, test, and deploy as a single application.
  - **Performance:** Lower latency as components interact within the same process.
- **Cons of Monolithic Architecture:**
  - **Scalability:** Harder to scale individual parts of the system.
  - **Maintenance:** As the system grows, it becomes harder to maintain and evolve.

Another way to run a trade-off analysis is a table that scores each option per factor on a Fibonacci scale (1, 2, 3, 5, 8), where a higher score is better:

| Factor | Option 1: Microservices | Option 2: Monolithic | Multiplier |
|---|---|---|---|
| Ease of Scaling Individual Components | 8 | 2 | 3 |
| Overall System Operational Complexity | 2 | 8 | 2 |
| Ability to Isolate Failures to Specific Components | 8 | 2 | 1 |
| Impact of Network Latency on Inter-Component Communication | 2 | 8 | 1 |
| Flexibility in Independent Deployment of Services | 8 | 3 | 1 |
| Ease of Maintaining Data Consistency Across Components | 2 | 8 | 1 |
| Initial Development and Deployment Simplicity | 3 | 8 | 1 |
| System Performance without Network Overhead | 3 | 8 | 1 |
| Long-term Maintenance of Growing System | 8 | 2 | 1 |
| Risk of Deployment Affecting Entire System | 8 | 2 | 1 |
| Total | 70 | 63 | |

The multiplier indicates how important each factor is to the business. Each score is multiplied by its factor's multiplier before the totals are summed.
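The totals in such a table are weighted sums: each score is multiplied by its factor's multiplier and the products are added up per option. The Python sketch below reproduces that arithmetic with shortened factor names. One assumption is labeled in the code: the first factor is given a weight of 3, because that is the weight under which the scores add up to the stated totals of 70 and 63.

```python
# (factor, microservices score, monolith score, multiplier)
FACTORS = [
    # Assumption: weight 3 here, the value implied by the totals 70 and 63.
    ("Ease of scaling individual components", 8, 2, 3),
    ("Overall operational complexity", 2, 8, 2),
    ("Failure isolation", 8, 2, 1),
    ("Network latency impact", 2, 8, 1),
    ("Independent deployment", 8, 3, 1),
    ("Data consistency", 2, 8, 1),
    ("Initial simplicity", 3, 8, 1),
    ("Performance without network overhead", 3, 8, 1),
    ("Long-term maintenance", 8, 2, 1),
    ("Risk of deployment affecting entire system", 8, 2, 1),
]


def weighted_totals(factors):
    """Sum score * multiplier for each option."""
    microservices = sum(micro * weight for _, micro, _, weight in factors)
    monolith = sum(mono * weight for _, _, mono, weight in factors)
    return microservices, monolith


micro_total, mono_total = weighted_totals(FACTORS)
print(f"Microservices: {micro_total}")
print(f"Monolithic:    {mono_total}")
```

Because the result is sensitive to the multipliers, it is worth recording in the ADR who set them and why, so that a later reader can re-run the comparison with different priorities.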

10. 🖺 API Documentation

What is API Documentation?

API documentation is crucial for describing how systems communicate through APIs, especially in service-oriented and microservices architectures. It ensures that internal and external consumers understand how to interact with services effectively.

Why API Documentation Matters:

- Clarity in Communication: Clear documentation helps developers understand API endpoints, request/response formats, and expected behavior.

- Consistency Across Teams: Standardized documentation ensures that different teams can integrate and consume APIs efficiently.

- Easier Onboarding: New developers can quickly understand how to use APIs without diving deep into the code.

- Contract Integrity: It helps ensure that APIs adhere to contracts, avoiding breaking changes that could affect consumers.

How to Document APIs:

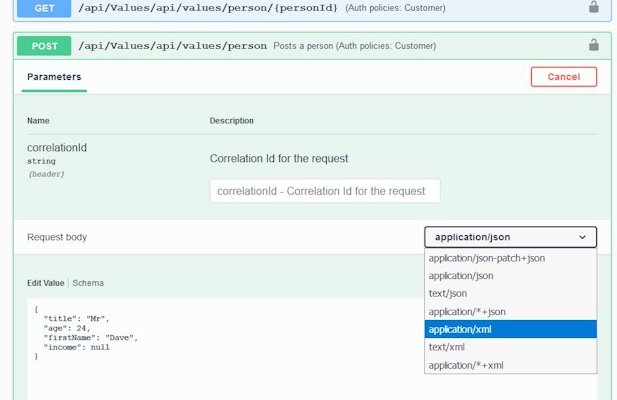

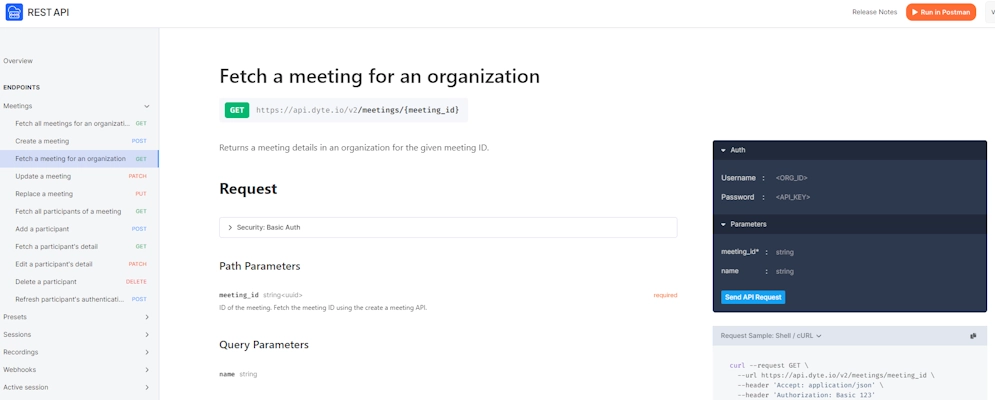

- Tools for API Documentation: Use tools like Swagger, OpenAPI, or Postman to generate and maintain clear, interactive API documentation (see the full list below).

- Endpoint Details: Document all API endpoints, including HTTP methods (GET, POST, PUT, DELETE), paths, and query parameters.

- Request and Response Formats: Include detailed descriptions of request bodies, headers, and response types (JSON, XML, etc.).

- Error Handling: Document error codes, messages, and recovery suggestions.

- Versioning: State API versions, especially if breaking changes are introduced.

- Authentication and Authorization: Describe how users authenticate (e.g., OAuth, API keys) and what permission levels are required for different actions.
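Most of the items above (endpoints, parameters, response formats, error responses, version, authentication) have a place in an OpenAPI document. The Python sketch below builds a minimal OpenAPI 3.0 description as a dictionary and lists the operations it documents. The "Orders API", its path, the `Order` schema, and the `X-API-Key` header are all hypothetical examples; the `documented_operations` helper is likewise only an illustration.

```python
import json

openapi_document = {
    "openapi": "3.0.3",
    # Versioning: the API version lives in the info block.
    "info": {"title": "Orders API (hypothetical)", "version": "1.2.0"},
    "paths": {
        "/orders/{orderId}": {
            "get": {
                "summary": "Fetch a single order",
                "parameters": [
                    {
                        "name": "orderId",
                        "in": "path",
                        "required": True,
                        "schema": {"type": "string"},
                    }
                ],
                "responses": {
                    "200": {
                        "description": "The order was found",
                        "content": {
                            "application/json": {
                                "schema": {"$ref": "#/components/schemas/Order"}
                            }
                        },
                    },
                    # Error handling: document each error response explicitly.
                    "404": {"description": "No order with this ID exists"},
                },
                # Authentication: this operation requires the API key scheme.
                "security": [{"ApiKeyAuth": []}],
            }
        }
    },
    "components": {
        "schemas": {
            "Order": {
                "type": "object",
                "required": ["id", "status"],
                "properties": {
                    "id": {"type": "string"},
                    "status": {"type": "string"},
                },
            }
        },
        "securitySchemes": {
            "ApiKeyAuth": {"type": "apiKey", "in": "header", "name": "X-API-Key"}
        },
    },
}


def documented_operations(document: dict) -> list[str]:
    """List every 'METHOD /path' pair described in the document."""
    http_methods = {"get", "post", "put", "delete", "patch"}
    return sorted(
        f"{method.upper()} {path}"
        for path, item in document["paths"].items()
        for method in item
        if method in http_methods
    )


print(documented_operations(openapi_document))
print(json.dumps(openapi_document, indent=2))
```

The JSON output can be saved to a file and loaded by tools that render OpenAPI definitions, such as the ones listed in the next section.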

Tools for Generating API Documentation:

Swagger / OpenAPI

- Swagger is one of the most widely used tools for designing and documenting RESTful APIs. It provides an interactive documentation interface that allows users to test API endpoints directly.

- OpenAPI is the underlying standard that Swagger follows for API documentation.

- OpenAPI Specification

Postman

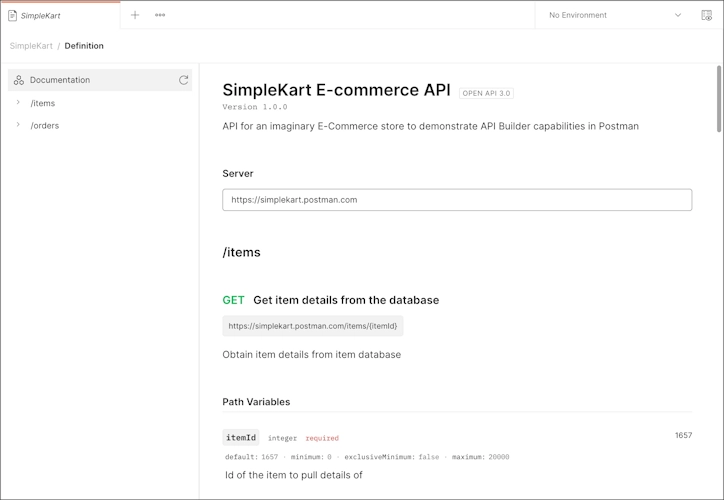

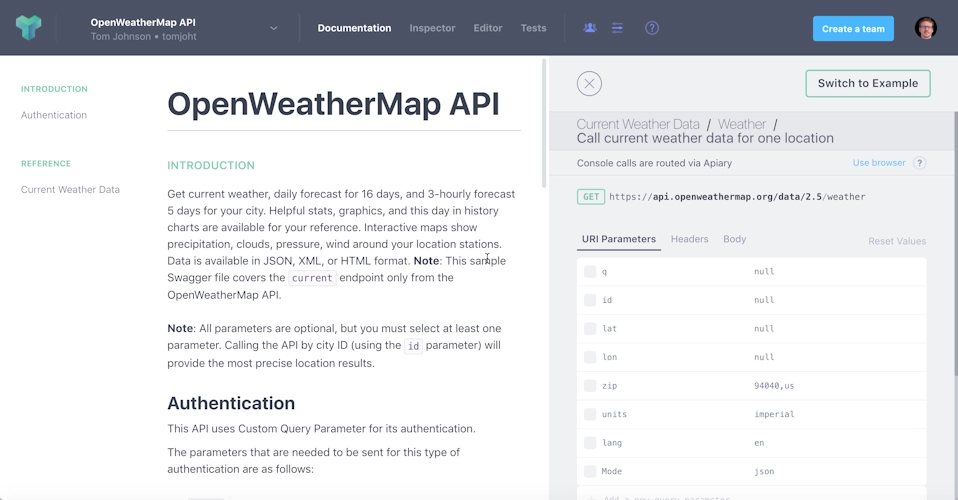

- Postman is a collaboration platform for API development that also provides tools for creating API documentation. It allows you to share interactive API docs and testing environments.

Redoc

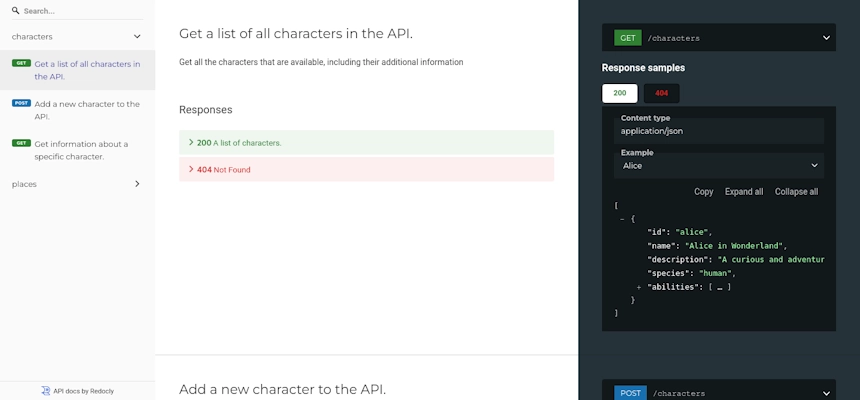

- Redoc is another tool for generating API documentation from OpenAPI (Swagger) definitions. It provides a clean, responsive, and interactive UI.

Docusaurus

- Docusaurus is a static site generator designed for documentation websites, often used in conjunction with Swagger or OpenAPI for API documentation.

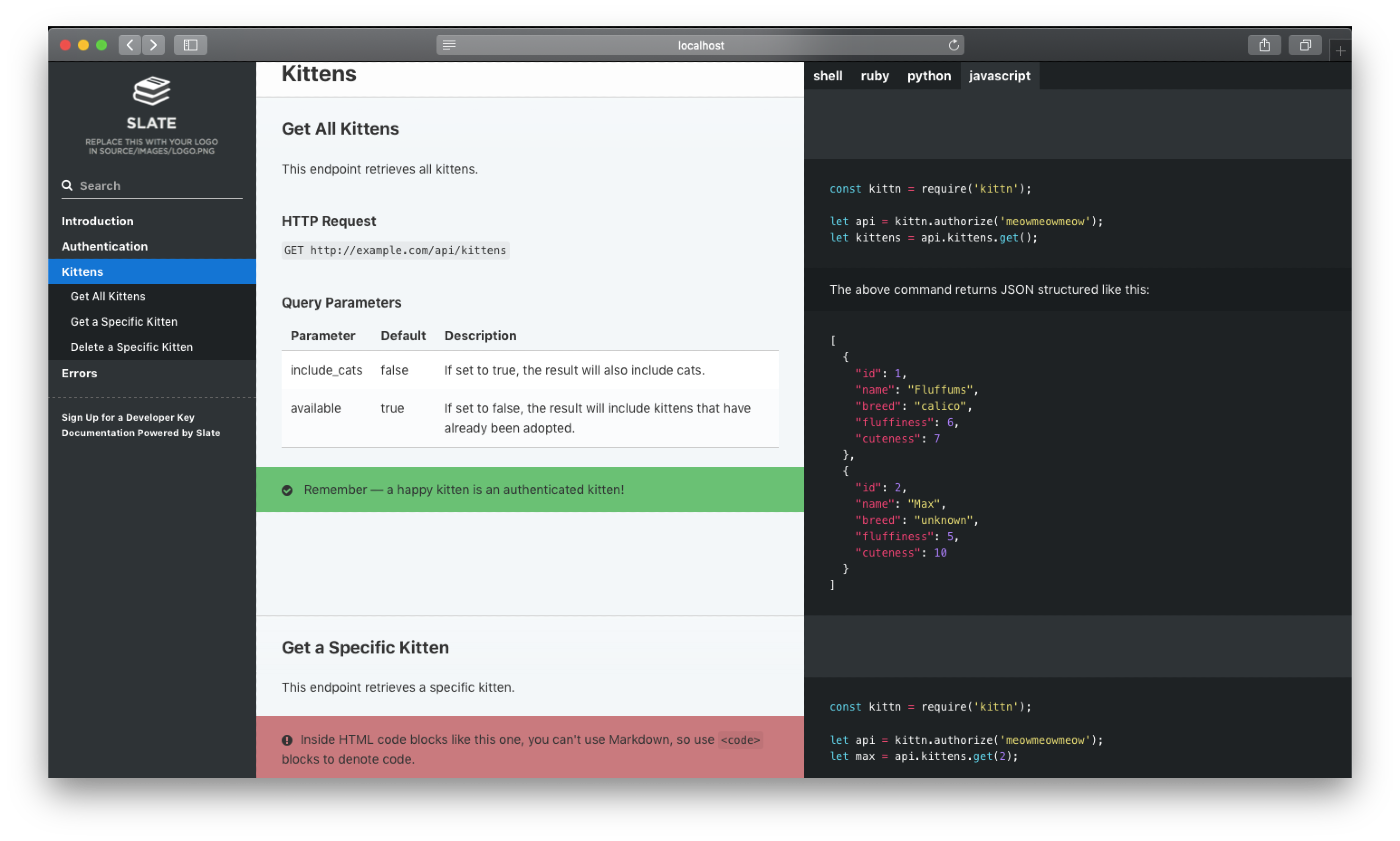

Slate

- Slate is an API documentation tool that generates beautiful, responsive, three-column API documentation from Markdown files.

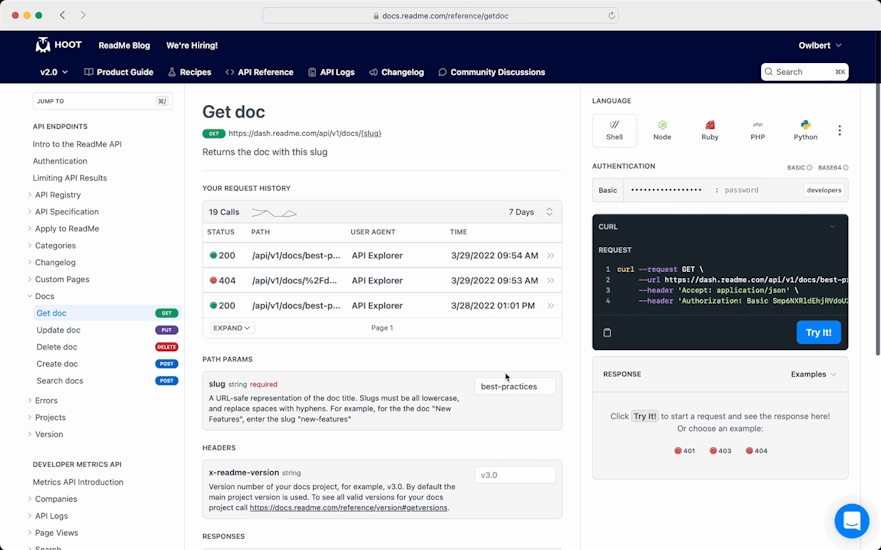

ReadMe

- ReadMe is a SaaS platform for creating beautiful and interactive API documentation, with features like analytics and versioning.

API Blueprint

- API Blueprint is a powerful high-level API description language that helps you create API documentation and mock servers. It’s an alternative to Swagger and OpenAPI.

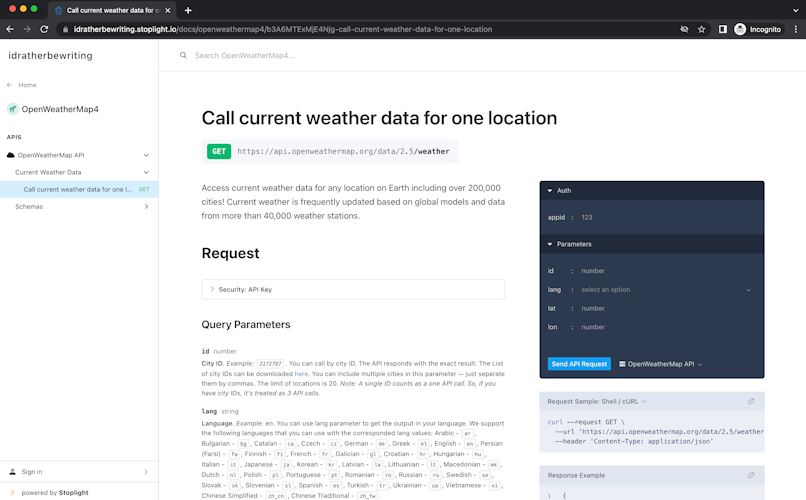

Stoplight

- Stoplight provides an API design platform that includes documentation, testing, and mocking features based on the OpenAPI specification.