6 Steps to Scale Your Application in the Cloud

Scaling an application in the cloud can seem complicated, but with the right approach it becomes a manageable task. This article outlines six steps to help you scale 📈 your application effectively, so it can handle increased demand and keep performing well. You'll learn how to use cloud resources to extend your application's capabilities, improve its resilience, and prepare for future growth.

Step 1: Start with Cloud-Native Managed Services

Why Start with Managed Services?

Starting with cloud-native managed services from AWS, Azure, or GCP offers the following benefits:

- Ease of Use: Focus on application development while the cloud provider handles the heavy lifting of infrastructure management.

- Automatic Scaling: Many managed services scale with demand automatically or with minimal configuration, maintaining performance without manual intervention.

- Built-In Security: Enjoy built-in security features and compliance certifications, reducing the complexity of maintaining security standards.

- Cost Efficiency: Pay for what you use, reducing operational costs associated with managing your infrastructure.

Cloud Service Cheat Sheet for Application Hosting and Database Services

| Cloud Provider | Service Type | Service Name | Description |
|---|---|---|---|
| AWS | Application Hosting | AWS Elastic Beanstalk | PaaS for deploying and managing applications. |
| AWS | Application Hosting | AWS App Runner | Fully managed service for containerized apps and APIs. |
| AWS | Database Service | Amazon RDS (Relational Database Service) | Managed relational DB supporting multiple engines. |
| AWS | Database Service | Amazon Aurora | High-performance MySQL/PostgreSQL-compatible relational DB. |
| AWS | Database Service | Amazon DynamoDB | Fully managed NoSQL DB with seamless scalability. |
| AWS | Database Service | Amazon DocumentDB | Managed document DB service compatible with MongoDB. |
| AWS | Database Service | Amazon Neptune | Fully managed graph DB for highly connected data. |
| AWS | Database Service | Amazon Timestream | Serverless time series DB for IoT and operations. |
| AWS | Database Service | Amazon Redshift | Managed data warehouse with SQL support. |
| AWS | Database Service | Amazon MemoryDB | Redis-compatible in-memory DB with low latency. |
| Azure | Application Hosting | Azure App Service | PaaS for web apps, mobile backends, and APIs with auto-scaling. |
| Azure | Database Service | Azure SQL Database | Managed relational DB with self-optimization features. |
| Azure | Database Service | Azure Cosmos DB | Globally distributed, multi-model DB with low latency. |
| Azure | Database Service | Azure Database for MySQL | Managed MySQL DB service. |
| Azure | Database Service | Azure Database for PostgreSQL | Managed PostgreSQL DB with high availability. |
| Azure | Database Service | Azure Managed Instance for Apache Cassandra | Managed Apache Cassandra clusters. |
| Azure | Database Service | Azure Synapse Analytics | Unified analytics and data warehousing service. |
| Azure | Database Service | Oracle Database on Azure | Support for running Oracle workloads in Azure. |
| GCP | Application Hosting | Google App Engine | Fully managed PaaS with auto-scaling and load balancing. |
| GCP | Database Service | Google Cloud SQL | Managed relational DB with support for multiple engines. |
| GCP | Database Service | Google Cloud Spanner | Managed, scalable DB with strong consistency for global apps. |
| GCP | Database Service | Google BigQuery | Serverless data warehouse with fast SQL queries. |
| GCP | Database Service | Google Cloud Datastore | Scalable NoSQL DB for web and mobile apps. |
| GCP | Database Service | Google Cloud Bigtable | NoSQL DB for large workloads. |

Step 2: Automate Scaling to Handle Any Traffic Surge

Enabling auto-scaling offers several key benefits:

- Automatic Resource Management: Auto-scaling ensures that your application and database services automatically adjust to handle changes in traffic, providing consistent performance without manual intervention.

- Cost Optimization: By scaling down during low-traffic periods, auto-scaling helps reduce costs so that you pay only for the resources you need.

- Improved Reliability and Availability: Auto-scaling helps maintain application availability during unexpected traffic spikes by automatically provisioning additional resources to meet demand.

The following cloud services help you enable auto-scaling for your apps:

Amazon Web Services

- AWS Elastic Beanstalk Auto Scaling: Automatically scales your application up or down based on metrics like CPU utilization or request count.

- AWS App Runner Auto Scaling: Automatically adjusts the number of running instances to meet your application’s traffic demands, based on the number of concurrent requests each instance handles.

Microsoft Azure

- Azure App Service Autoscale: Automatically scales your web apps in response to demand. You can configure rules based on CPU usage, memory usage, and other metrics.

Google Cloud Platform

- Google App Engine Auto Scaling: Automatically scales your application up and down based on traffic. App Engine provides flexible auto-scaling based on request rate, response latencies, and other factors.
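Most of these services let you express a scaling policy as a target value for a metric, such as average CPU utilization or concurrent requests per instance. The sketch below shows the proportional calculation behind this kind of target tracking, similar to the formula documented for the Kubernetes Horizontal Pod Autoscaler. The function name, the `min_instances`/`max_instances` bounds, and the 10% tolerance are illustrative assumptions; each provider's real algorithm adds its own cooldowns, warm-up periods, and stabilization windows.

```python
import math


def desired_instances(
    current_instances: int,
    current_metric: float,
    target_metric: float,
    min_instances: int = 1,
    max_instances: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Hypothetical target-tracking helper: resize the fleet in proportion
    to how far the observed metric is from its target."""
    if current_instances <= 0 or target_metric <= 0:
        raise ValueError("instances and target must be positive")
    ratio = current_metric / target_metric
    # Ignore small deviations so the fleet doesn't flap between sizes.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_instances
    else:
        desired = math.ceil(current_instances * ratio)
    # Clamp the result to the configured bounds.
    return max(min_instances, min(max_instances, desired))


# 4 instances at 90% average CPU with a 60% target: scale out to 6.
print(desired_instances(4, current_metric=90.0, target_metric=60.0))
# 4 instances at 20% CPU: scale in, but never below min_instances (2).
print(desired_instances(4, current_metric=20.0, target_metric=60.0, min_instances=2))
```

The minimum bound keeps a baseline of capacity for sudden spikes, and the maximum bound caps cost if a metric misbehaves.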

Step 3: Reduce Database Load and Improve Response Times with Caching

Caching is a critical strategy for optimizing the performance of your cloud-native applications. By storing frequently accessed data in a cache, you can significantly reduce the load on your databases, decrease latency, and improve the overall user experience.

Managed cache services from the major cloud providers:

- Amazon ElastiCache: A fully managed in-memory data store service supporting Redis and Memcached. It provides sub-millisecond latency, making it ideal for caching frequently accessed data.

- Azure Cache for Redis: A fully managed in-memory cache that supports Redis, providing high throughput and low latency access to data, which is crucial for applications requiring quick data retrieval.

- Google Cloud Memorystore: A fully managed in-memory data store service for Redis and Memcached, offering low-latency access to cached data and simplifying the process of scaling caching infrastructure.

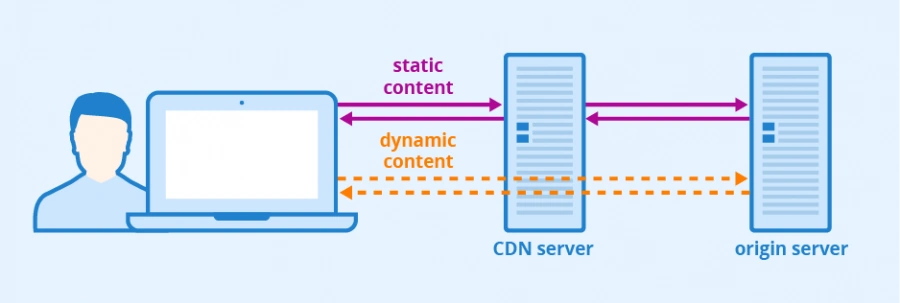

Caching isn't limited to the data layer. For static files, for example, you can use a CDN.

Content Delivery Network (CDN) Caching

CDNs cache static content (such as images, videos, and HTML files) at edge locations around the world, ensuring that users can access content quickly, regardless of their geographical location. This reduces the load on your servers and speeds up content delivery to end users.

- Amazon CloudFront: A global CDN service that caches content at edge locations, providing low-latency access to static and dynamic web content.

- Azure CDN: A global content delivery network service that caches content at strategically placed edge nodes, optimizing content delivery speed and reducing latency.

- Google Cloud CDN: A global CDN service that caches content at Google’s edge locations, reducing latency and speeding up the delivery of static and dynamic content.

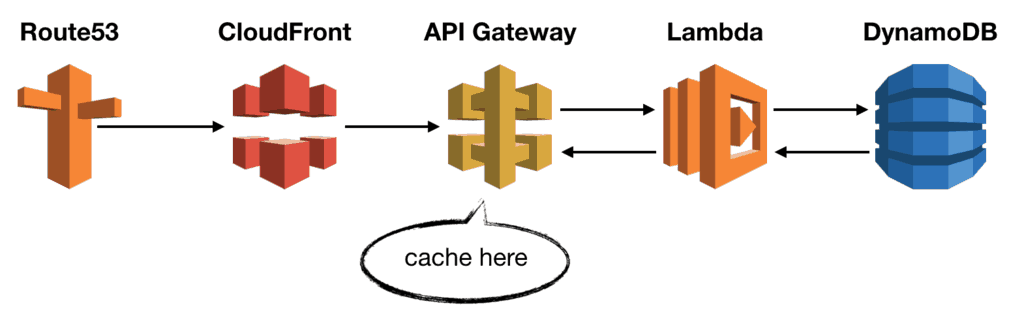
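CDNs generally decide how long to keep a copy of a response based on the origin's `Cache-Control` header, subject to each CDN's own TTL settings. A common convention is to give fingerprinted static assets a long, immutable lifetime and to make HTML revalidate, so new deployments become visible quickly. The helper below is a hypothetical sketch of that policy; the extension list, the hash-in-filename pattern, and the `max-age` values are assumptions to adapt to your build setup.

```python
import re

# Hypothetical convention: the build tool emits names like "app.3f9a1c2b.js".
FINGERPRINTED = re.compile(r"\.[0-9a-f]{8,}\.(?:js|css|png|jpg|svg|woff2)$")
STATIC_EXTENSIONS = (".js", ".css", ".png", ".jpg", ".svg", ".woff2")


def cache_control_for(path: str) -> str:
    """Choose a Cache-Control header value for a response path."""
    if FINGERPRINTED.search(path):
        # New content gets a new filename, so caching for a year is safe.
        return "public, max-age=31536000, immutable"
    if path.endswith(STATIC_EXTENSIONS):
        # Unversioned assets: cache at the edge, but for a shorter time.
        return "public, max-age=3600"
    if path.endswith(".html") or path.endswith("/"):
        # HTML may be stored, but must be revalidated with the origin before reuse.
        return "no-cache"
    # Dynamic or personalized responses: keep them out of shared caches.
    return "private, no-store"


print(cache_control_for("/static/app.3f9a1c2b.js"))
print(cache_control_for("/index.html"))
```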

API Gateway-Level Caching

API gateway-level caching stores the responses to frequently repeated API requests, such as the results of expensive queries or calculations, directly in the gateway layer. The gateway then answers repeated requests without reaching your backend, which reduces database queries and computational overhead.

- AWS API Gateway Caching: Lets you cache the responses to API requests at the gateway level with a configurable time-to-live (TTL), reducing repeated backend processing and database queries.

- Azure API Management Caching: Allows you to cache responses from your APIs, reducing latency and improving performance for repeated requests.

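- Google Cloud: Cloud Endpoints focuses on API authentication, monitoring, and quotas rather than response caching; for gateway-level caching on Google Cloud, Apigee provides a ResponseCache policy, or you can put Cloud CDN in front of cacheable API responses.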
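Gateway caches typically key each entry on the request method, the path, and a chosen subset of parameters, and they cache only safe requests such as GET. The sketch below illustrates that idea in code. `GatewayCache`, its `key_params` setting, and the 60-second TTL are hypothetical and do not correspond to any provider's API; with a managed gateway you configure the cache key and TTL in the gateway itself.

```python
import time
from typing import Callable, Dict, Iterable, Mapping, Tuple

Response = Tuple[int, str]  # (status code, body)
Backend = Callable[[str, str, Mapping[str, str]], Response]


class GatewayCache:
    """Hypothetical response cache sitting in front of a backend handler."""

    def __init__(self, ttl_seconds: float, key_params: Iterable[str],
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.key_params = tuple(sorted(key_params))  # params that change the response
        self.clock = clock
        self._entries: Dict[tuple, Tuple[float, Response]] = {}

    def _key(self, method: str, path: str, params: Mapping[str, str]) -> tuple:
        # Parameters outside key_params (e.g. tracking IDs) are ignored,
        # so they don't fragment the cache.
        return (method, path) + tuple((p, params.get(p)) for p in self.key_params)

    def handle(self, method: str, path: str, params: Mapping[str, str],
               backend: Backend) -> Response:
        if method != "GET":
            return backend(method, path, params)  # never cache writes
        key = self._key(method, path, params)
        entry = self._entries.get(key)
        if entry is not None and self.clock() < entry[0]:
            return entry[1]  # served by the gateway; backend not called
        response = backend(method, path, params)
        if response[0] == 200:  # cache successful responses only
            self._entries[key] = (self.clock() + self.ttl_seconds, response)
        return response


calls = []


def backend(method: str, path: str, params: Mapping[str, str]) -> Response:
    calls.append((method, path))
    return 200, f"products page {params.get('page', '1')}"


gw = GatewayCache(ttl_seconds=60, key_params=["page"])
gw.handle("GET", "/products", {"page": "2", "utm_source": "ad"}, backend)
gw.handle("GET", "/products", {"page": "2", "utm_source": "email"}, backend)
print(len(calls))  # 1: the second request hit the gateway cache
```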

Step 4: Enhance Database Performance by Separating Read/Write Operations

As your application scales, the database can quickly become a bottleneck, especially when handling a high volume of transactions. To mitigate this, it's essential to separate read and write operations. This approach helps optimize database performance by distributing the load more efficiently, allowing your application to handle more concurrent users and larger datasets without compromising speed or reliability.

Key reasons why splitting your database may benefit your solution:

- Improved Performance: By directing read queries to replicas, you reduce the load on the primary database, allowing it to handle write operations more efficiently.

- Scalability: As your application grows, read replicas and sharding allow you to scale horizontally, handling more users and larger datasets without a performance hit.

- High Availability: A read replica can take over if the primary fails (for example, through promotion or managed failover), and sharding limits the impact of a failure to a subset of your data. Both help your application stay available during high-traffic periods or outages.

Read/Write Splitting

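Read/write splitting involves directing read operations (such as SELECT queries) to read replicas and write operations (INSERT, UPDATE, and DELETE queries) to the primary database. This distribution of queries balances the load and improves the overall performance of the database. It is usually implemented in the application's data-access layer, in a database driver, or in a proxy. It is related to, but simpler than, the CQRS pattern, which goes further by using separate models for reads and writes. Because replicas are typically updated asynchronously, a read from a replica may briefly lag behind the most recent writes.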
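In application code, read/write splitting often comes down to a small routing layer in front of your connections. The sketch below is a simplified, hypothetical router: it sends statements that start with SELECT to replicas in round-robin order and everything else to the primary. The endpoint names are placeholders. A real router must also handle cases this one only partly covers, such as reads inside a write transaction; here, locking reads (`FOR UPDATE`) and requests flagged with `force_primary` (for read-your-writes consistency) go to the primary.

```python
import itertools
from typing import List


class ReadWriteRouter:
    """Hypothetical router that picks a database endpoint per SQL statement."""

    def __init__(self, primary: str, replicas: List[str]) -> None:
        self.primary = primary
        # Fall back to the primary when no replicas are configured.
        self._replicas = itertools.cycle(replicas or [primary])

    def endpoint_for(self, sql: str, force_primary: bool = False) -> str:
        statement = sql.lstrip().upper()
        is_read = statement.startswith("SELECT") and "FOR UPDATE" not in statement
        if is_read and not force_primary:
            return next(self._replicas)  # spread reads across replicas
        # Writes, locking reads, and read-your-writes requests use the primary.
        return self.primary


router = ReadWriteRouter(
    primary="db-primary.internal",  # placeholder hostnames
    replicas=["db-replica-1.internal", "db-replica-2.internal"],
)
print(router.endpoint_for("SELECT * FROM orders WHERE id = 7"))
print(router.endpoint_for("UPDATE orders SET status = 'paid' WHERE id = 7"))
print(router.endpoint_for("SELECT * FROM orders WHERE id = 7"))
```

With Amazon Aurora, the cluster's reader endpoint already balances connections across Aurora Replicas, so a router like this would only need to choose between the writer and reader endpoints.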

Cloud services that help you create read replicas of your primary database:

Amazon Web Services

- Amazon RDS Read Replicas: Allows you to create one or more replicas of a primary database, directing read traffic to these replicas, which reduces the load on the primary instance.

- Amazon Aurora Read Replicas: Supports up to 15 read replicas, which can be used to offload read traffic from the primary database, improving performance and fault tolerance.

Microsoft Azure

- Azure SQL Database Geo-Replication: Lets you create readable secondary replicas of your SQL database in different regions and direct read traffic to them, reducing load on the primary database.

- Azure Cosmos DB Global Distribution: Automatically replicates your data across the regions you configure, allowing you to route read traffic to the nearest replica and reducing latency and load on the primary (write) region.

Google Cloud Platform

- Google Cloud SQL Read Replicas: Lets you create read replicas of your Cloud SQL database and direct read queries to them to balance the load.

- Google Cloud Spanner Multi-Region Configuration: Distributes data across multiple regions, enabling you to direct read operations to the nearest replica, optimizing performance and availability.

Database Sharding

For extensive datasets or applications with extremely high traffic, database sharding (horizontal partitioning) may be necessary. Sharding involves splitting your database into smaller, more manageable pieces (shards), each of which can be hosted on a separate database instance. This allows you to scale horizontally, improving both performance and availability. This article might help you understand more about sharding options.
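A simple form of sharding routes each record to a shard by hashing its shard key, for example a customer ID. The sketch below uses a stable hash from `hashlib` rather than Python's built-in `hash()`, which is randomized per process for strings and would send the same key to different shards after a restart. The shard names are placeholders. Note the trade-off: with plain modulo hashing, changing the number of shards remaps most keys, which is why production systems often use consistent hashing or a lookup directory instead.

```python
import hashlib
from typing import List


def shard_for(key: str, shards: List[str]) -> str:
    """Map a shard key to one of the shards using a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer, then take the modulo.
    bucket = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[bucket]


shards = ["orders-shard-0", "orders-shard-1", "orders-shard-2", "orders-shard-3"]
for customer_id in ["cust-1001", "cust-1002", "cust-1003"]:
    print(customer_id, "->", shard_for(customer_id, shards))
```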

Step 5: Transition to a Service-Oriented Architecture / Microservices

As your application grows, moving from a monolithic architecture to a service-oriented or microservices-based architecture can help you manage complexity, improve scalability, and shorten development cycles. SOA and microservices let you break your application into smaller services that can be developed, deployed, and scaled independently.

An SOA or microservices architecture decomposes a monolithic application into a collection of loosely coupled services, each responsible for a specific function. This approach offers several advantages:

- Independent Scaling: Each service can be scaled independently based on its specific resource requirements, leading to more efficient use of infrastructure.

- Decentralized Data Management: Each service can have a database optimized for its specific needs, which helps reduce cross-service dependencies and improve performance.

- Enhanced Agility: Development teams can work on different microservices simultaneously, allowing faster development, testing, and deployment cycles.

Cloud hosting options for Microservices

Amazon Web Services

- AWS Lambda: A serverless computing service that lets you run code in response to events without provisioning or managing servers. Ideal for building lightweight microservices.

- Amazon Elastic Kubernetes Service (EKS): A fully managed Kubernetes service that makes it easy to deploy, manage, and scale containerized microservices using Kubernetes.

- Amazon Elastic Container Service (ECS): A fully managed container orchestration service that supports Docker containers, enabling you to run and scale containerized microservices.

- AWS API Gateway: A fully managed service that allows you to create, publish, maintain, monitor, and secure APIs, enabling communication between microservices.

Microsoft Azure

- Azure Functions: A serverless computing service that enables you to run event-driven code without having to provision or manage infrastructure, making it easy to build microservices.

- Azure Kubernetes Service (AKS): A fully managed Kubernetes service that simplifies deploying, managing, and scaling containerized applications.

- Azure Container Instances (ACI): A service that allows you to run containers without managing virtual machines or orchestration, providing a quick way to deploy microservices.

- Azure API Management: A fully managed API gateway for creating, publishing, securing, and monitoring APIs for backend services hosted anywhere.

Google Cloud Platform

- Google Cloud Functions: A serverless execution environment for building and connecting cloud services, ideal for lightweight microservices.

- Google Kubernetes Engine (GKE): A managed Kubernetes service that allows you to deploy, manage, and scale containerized applications using Kubernetes.

- Google Cloud Run: A fully managed compute platform that automatically scales your stateless containers, providing an easy way to run and scale microservices.

- Google Cloud Endpoints: A fully managed API gateway that helps you secure and monitor APIs, enabling microservices to communicate securely and efficiently.
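To make the idea of a small, independently deployable service concrete, here is a sketch of a single-purpose microservice written as an AWS Lambda Python handler behind an API Gateway proxy integration. In that setup, the HTTP request arrives as the `event` dictionary, and the function returns a dictionary with `statusCode`, `headers`, and `body`. The `/orders/{orderId}` route, the `lambda_handler` name (configurable in the function settings), and the in-memory `ORDERS` data are hypothetical stand-ins for this service's own API and datastore.

```python
import json
from typing import Any, Dict

# Hypothetical data owned by this service; in practice it would live in the
# service's own database.
ORDERS: Dict[str, Dict[str, Any]] = {"1001": {"id": "1001", "status": "shipped"}}


def _response(status: int, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Build the response shape expected by an API Gateway proxy integration."""
    return {
        "statusCode": status,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(payload),
    }


def lambda_handler(event: Dict[str, Any], context: Any) -> Dict[str, Any]:
    """Handle GET /orders/{orderId}; this service owns only order lookups."""
    # pathParameters can be missing or null when the route has no parameters.
    order_id = (event.get("pathParameters") or {}).get("orderId")
    order = ORDERS.get(order_id) if order_id else None
    if order is None:
        return _response(404, {"message": "order not found"})
    return _response(200, order)


# Local smoke test with a minimal hand-built event; `context` is unused here.
print(lambda_handler({"pathParameters": {"orderId": "1001"}}, None)["statusCode"])
```

Because the function owns a single responsibility, it can be deployed and scaled on its own; the same handler logic could also run in a container on ECS, EKS, or Cloud Run behind a small HTTP server.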

Step 6: Leverage Event-Driven Architecture

Why Leverage Event-Driven Architecture?

Adopting an event-driven architecture offers several key benefits:

- Scalability: By decoupling services and handling events asynchronously, your application can scale more efficiently and handle high traffic volumes without bottlenecks.

- Resilience: Because producers and consumers are decoupled through a broker, services can keep operating even when other parts of the system are down or slow; events wait in the broker until consumers can process them.

- Flexibility: Easily add new features or services without impacting the entire system, allowing for more agile development and faster time-to-market.

Cheat sheet with message broker use cases:

| Cloud Provider | Message Broker | Description | Use Cases | Key Features |
|---|---|---|---|---|
| AWS | Amazon SQS | Simple Queue Service that offers reliable, highly scalable hosted queues for storing messages. | Task queues, decoupling microservices, async processing | FIFO and Standard queues, dead-letter queues, serverless |
| AWS | Amazon SNS | Simple Notification Service for pub/sub messaging, enabling event-driven, serverless applications. | Pub/sub messaging, event notifications, fan-out messaging | Topics, multi-protocol delivery, message filtering |
| AWS | Amazon MQ | Managed message broker service for Apache ActiveMQ and RabbitMQ. | Enterprise messaging, migration from on-premises to cloud | Supports MQTT, AMQP, STOMP, JMS |
| AWS | Amazon EventBridge | Serverless event bus service that connects application data from various sources to AWS services. | Event-driven microservices, integrating SaaS apps with AWS | Event buses, Schema Registry, event routing |
| AWS | Amazon Kinesis | Managed service for real-time data streaming. | Real-time analytics, event streaming, log and event ingestion | Streams, Firehose, Analytics, Video Streams |
| Azure | Azure Service Bus | Fully managed enterprise message broker with native integration to Azure services. | Reliable message queuing, pub/sub messaging, event-driven apps | FIFO ordering via sessions, dead-letter queues, geo-disaster recovery |
| Azure | Azure Event Hubs | Big data streaming platform and event ingestion service for real-time analytics. | Telemetry, log collection, data streaming, IoT scenarios | High throughput, partitioning, integration with analytics |
| Azure | Azure Event Grid | Fully managed event routing service for uniform event consumption. | Event-driven apps, serverless automation, real-time updates | Event filtering, advanced routing, pay-per-event model |
| Azure | Azure Queue Storage | Simple message queue service for storing large numbers of messages accessible via HTTP/HTTPS. | Task queues, background job processing, decoupling services | High durability, cost-effective, integration with other Azure services |
| Azure | Azure HDInsight | Managed cloud Hadoop service that provides support for Apache Kafka, a distributed streaming platform. | Real-time analytics, data integration, event-driven data processing | Kafka compatibility, managed clusters, big data support |
| Azure | Azure SignalR Service | Managed service that simplifies adding real-time web functionality to applications. | Real-time chat, live dashboards, instant notifications | Persistent connections, scale-out options, integrated security |
| GCP | Google Pub/Sub | Global, fully managed messaging service for asynchronous messaging and event-driven architectures. | Real-time analytics, event streaming, reliable messaging | Global delivery, automatic scaling, message filtering |
| GCP | Google Cloud Tasks | Fully managed service for executing tasks at a later time, with delayed task execution support. | Task queues, deferred processing, serverless execution | Retry policies, rate limiting, task management |
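Several of these services, such as Amazon SQS and Azure Service Bus, move a message to a dead-letter queue after it fails processing a configured number of times, so a single bad message cannot block a queue forever. The sketch below simulates that behavior in-process with Python's `collections.deque`. `drain_queue`, `max_receive_count`, `process`, and the order messages are hypothetical; real brokers track delivery counts and redelivery timing for you (in SQS, for example, through a redrive policy with `maxReceiveCount`).

```python
from collections import deque
from typing import Callable, Deque, Dict, List


def drain_queue(
    messages: List[Dict],
    process: Callable[[Dict], None],
    max_receive_count: int = 3,
) -> List[Dict]:
    """Process messages with retries; return those moved to the dead-letter queue."""
    queue: Deque[Dict] = deque({"body": m, "receives": 0} for m in messages)
    dead_letter: List[Dict] = []
    while queue:
        envelope = queue.popleft()
        envelope["receives"] += 1
        try:
            process(envelope["body"])  # success: the message is deleted
        except Exception:
            if envelope["receives"] >= max_receive_count:
                dead_letter.append(envelope["body"])  # park it for inspection
            else:
                queue.append(envelope)  # make it available for another attempt
    return dead_letter


def process(order: Dict) -> None:
    """Hypothetical consumer that rejects malformed orders."""
    if "qty" not in order:
        raise ValueError("malformed order")


print(drain_queue([{"id": 1, "qty": 2}, {"id": 2}], process))  # [{'id': 2}]
```

Transient failures succeed on a later attempt, while messages that fail every time end up in the dead-letter queue, where they can be inspected and replayed.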

Event-Driven Patterns and Use Cases

Event-driven architectures can be applied to a wide range of scenarios, including:

- Microservices Communication: Use events to trigger actions across microservices, such as sending an email after a user signs up or updating inventory after a purchase.

- Real-Time Data Processing: Stream and process data in real time, such as monitoring IoT sensor data, analyzing financial transactions for fraud, or processing social media feeds.

- Decoupling Monolithic Applications: Gradually decouple a monolithic application into smaller services by introducing an event-driven architecture that allows components to communicate asynchronously.

- Workflow Automation: Automate complex workflows and business processes by chaining multiple event-driven services, so that each step starts when the previous step completes.
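The microservices communication pattern above can be modeled with a tiny in-process event bus. A publisher emits a `user.signed_up` event without knowing who consumes it, and each subscriber reacts to it independently. This is only an illustration: `EventBus` is a hypothetical name, and in production it would be replaced by a managed service such as Amazon SNS or EventBridge, Azure Event Grid, or Google Pub/Sub, which also deliver asynchronously and retry failed deliveries.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """Hypothetical in-memory pub/sub bus used to illustrate fan-out."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, payload: Dict[str, Any]) -> int:
        """Deliver the event to every subscriber; return how many received it."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(payload)  # a real broker would deliver asynchronously
        return len(handlers)


sent_emails: List[str] = []
audit_log: List[str] = []

bus = EventBus()
# Two independent consumers of the same event; the publisher knows neither.
bus.subscribe("user.signed_up", lambda e: sent_emails.append(e["email"]))
bus.subscribe("user.signed_up", lambda e: audit_log.append(f"new user {e['user_id']}"))

bus.publish("user.signed_up", {"user_id": 7, "email": "ana@example.com"})
print(sent_emails, audit_log)
```

Adding a new feature, such as a welcome discount, means adding another subscriber; the sign-up code that publishes the event does not change.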

Key Takeaways

- Start with Managed Services: Leverage cloud-native managed services for both application hosting and databases to minimize infrastructure management and optimize scalability from the outset.

- Automate Scaling: Implement auto-scaling to automatically adjust resources in response to demand, ensuring your application remains responsive and cost-effective.

- Optimize with Caching: Cache at the data layer, at the CDN edge, and at the API gateway to reduce database load and keep response times low for your users.

- Enhance Database Performance: Separate read and write operations using read replicas and sharding to distribute the load and maintain high performance under heavy traffic.

- Adopt Microservices Architecture: Transition to a microservices-based architecture to improve agility, scalability, and resilience by decoupling application components.

- Implement Event-Driven Architecture: Use an event-driven approach to enable asynchronous communication between services, enhancing scalability, flexibility, and fault tolerance.